When the FakeAV coder(s) fail

As I have written in many previous diaries, the various FakeAV groups put a lot of work into making their malware as resilient to legitimate anti-virus programs as possible. On the server side they abuse search engines to poison results and lure new users to their booby-trapped sites; on the client side they constantly modify their binaries in order to evade AV detection.

One of the most common ways of making detection more difficult is packing. However, the authors behind FakeAV also use a bunch of other techniques to keep changing their client binaries. They employ pretty much every obfuscation technique you can think of: anti-disassembly (destroying functions, opaque predicates, long ROP chains ...), anti-emulation, anti-VM, anti-debugging etc. We’ll take a look at two of these: anti-emulation and anti-VM.

Anti-emulation is used to prevent malware from executing (or to change the way it behaves) when it runs in an emulated environment. The emulated environment can be some kind of sandbox or, more commonly, an isolated environment that is part of a legitimate anti-virus product. Today’s AV products almost always use various heuristics in order to detect previously unknown malware. These heuristics are (besides other features) also based on the actions that the sample performs in the isolated environment. Basically, the AV program executes the sample in the isolated environment and monitors its activities. If something bad is detected (e.g. the sample dropping something in the C:\Windows\System32 directory), the AV program can block it and prevent it from infecting the machine.

Authors of malware usually try to detect if they are running in such an isolated environment by calling “weird” functions. FakeAV, for example, calls some of the following: LCMapStringA, GetFontData, GetKeyState, GetFileType, GetParent. The idea here is to call a function that the isolated environment (hopefully for the author) has not implemented properly and to detect that the return code is incorrect. As there are thousands of functions in the Windows API it’s impossible for the AV program to correctly implement all functions (although they take good care of those commonly used by malware). It’s a cat and mouse game.
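To make the idea concrete, here is a minimal sketch of the probe-and-compare logic in Python. It is a model only: the `Probe` type, the `mismatches` helper and the return values are hypothetical, and the lambdas are stand-ins for real Windows API calls (the arguments and expected values FakeAV actually uses are not given here).

```python
from typing import Callable, NamedTuple


class Probe(NamedTuple):
    name: str                # label used for reporting
    call: Callable[[], int]  # performs one API call with fixed arguments
    expected: int            # result a real system would return (assumed)


def mismatches(probes: list[Probe]) -> list[str]:
    """Return the names of probes whose result differs from the expected value."""
    return [p.name for p in probes if p.call() != p.expected]


# Hypothetical stand-ins: the lambdas imitate API calls, they do not make them.
real_system = [
    Probe("GetFileType", lambda: 0, 0),
    Probe("GetKeyState", lambda: 0, 0),
]
weak_emulator = [
    Probe("GetFileType", lambda: 0, 0),
    Probe("GetKeyState", lambda: -1, 0),  # emulator stub returns a bogus value
]

print(mismatches(real_system))
print(mismatches(weak_emulator))
```

Any non-empty result would tell the sample that at least one function is not implemented faithfully, which is the signal it uses to change its behavior.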

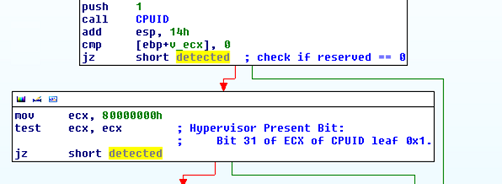

Besides such artificial isolated environments, malware authors (including the guys behind FakeAV) don’t like it when their malware is executed on virtual systems such as VMware or Virtual PC, or under real, hardware hypervisors. FakeAV uses quite a bit of well-known code to detect various virtual systems. One of the tests they use is the CPUID instruction. CPUID is very useful here since both Intel and AMD have reserved bit 31 of ECX of CPUID leaf 0x1 as the hypervisor present bit. This allows an application to check whether it is running in a guest (virtual) system by executing CPUID with EAX set to 0x1 and then checking bit 31 of ECX. If it is set, the application is running in a virtual system. This is what the FakeAV authors do as shown in the following picture:

Or … they failed (like, epic)? Check the picture above carefully. So, bit 31 of ECX has to be set in order to indicate that we are in a virtual machine. What did the FakeAV author do? After calling the CPUID instruction, instead of checking the value of ECX against 0x80000000 (bit 31), the author overwrites ECX with this value and then checks ECX against its own value. This test always returns 0, so the FakeAV author fails to detect whether the program is running in a virtual machine, even if the hypervisor honestly set bit 31. And there are more failures later in the code ….
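The difference between the intended test and the broken one can be modeled in a few lines of Python. This is a sketch under assumptions: Python cannot execute CPUID itself, so ECX is passed in as an integer, the two sample ECX values are hypothetical, and the final comparison is modeled as a CMP-style subtraction, which may not match the exact instruction in the sample.

```python
HYPERVISOR_BIT = 0x80000000  # bit 31 of ECX, CPUID leaf 0x1


def intended_check(ecx_from_cpuid: int) -> bool:
    """Test bit 31 of the ECX value that CPUID returned."""
    return (ecx_from_cpuid & HYPERVISOR_BIT) != 0


def buggy_check(ecx_from_cpuid: int) -> bool:
    """Model of the flawed logic: the CPUID result is discarded before the test."""
    ecx = ecx_from_cpuid
    ecx = HYPERVISOR_BIT                # the bug: ECX is overwritten with the mask
    return (ecx - HYPERVISOR_BIT) != 0  # value compared with itself: always 0


# Hypothetical ECX values, chosen only so that they differ in bit 31.
guest_ecx = 0xFFFA3203
bare_metal_ecx = 0x7FFA3203

for name, ecx in (("guest", guest_ecx), ("bare metal", bare_metal_ecx)):
    print(name, intended_check(ecx), buggy_check(ecx))
```

In this model `intended_check` separates the two cases, while `buggy_check` gives the same answer for any input because the value returned by CPUID never reaches the comparison.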

Before I end this diary, I’d like to congratulate my colleague Branko on winning Hex-Rays’ IDA Pro plugin contest (http://www.hex-rays.com/contest2011/) with Optimice (http://code.google.com/p/optimice/). Congratulations, of course, also go to Jennifer Baldwin from the University of Victoria for the Tracks plugin, which looks very cool.

--

Bojan

INFIGO IS


Comments

Jonathan

Jul 25th 2011

1 decade ago

Ryan

Jul 25th 2011

1 decade ago

Alex

Jul 25th 2011

1 decade ago

Rest assured, plenty of other examples where it would really help the bad guys will not be posted :)

Bojan

Jul 25th 2011

1 decade ago

What happens in general when the malware detects that it's running in a sandbox, under a hypervisor, etc.? Does it then attempt workarounds, or does it abort quietly?

I (personally) don't attribute enough sophistication to the blackhats that they would be thinking far enough ahead to quietly abort - in order to not potentially alert the user to a problem.

"We want the sheep that click unknown links to keep doing so - maybe our next exploit next week will get him"?

Just thinking (and trying to learn) :-)

lurk

Jul 26th 2011

1 decade ago

Steven

Jul 26th 2011

1 decade ago

Once they detect they are in a VM they just quietly exit and don't do anything - at least with this sample I analyzed.

This is quite common in malware; in some cases the bad guys even do some benign activities in order to fool automated analysis programs.

@Steven - quite possible :) Or he simply made a mistake :)

Bojan

Jul 26th 2011

1 decade ago