Running Snort on VMware ESXi

This is a guest diary by Basil Alawi

One of the challenges that security administrators face is deploying an IDS in modern network infrastructure. Unlike hubs, switches don't forward every packet to every port. A SPAN port or a network TAP can be used as a workaround in a switched environment.

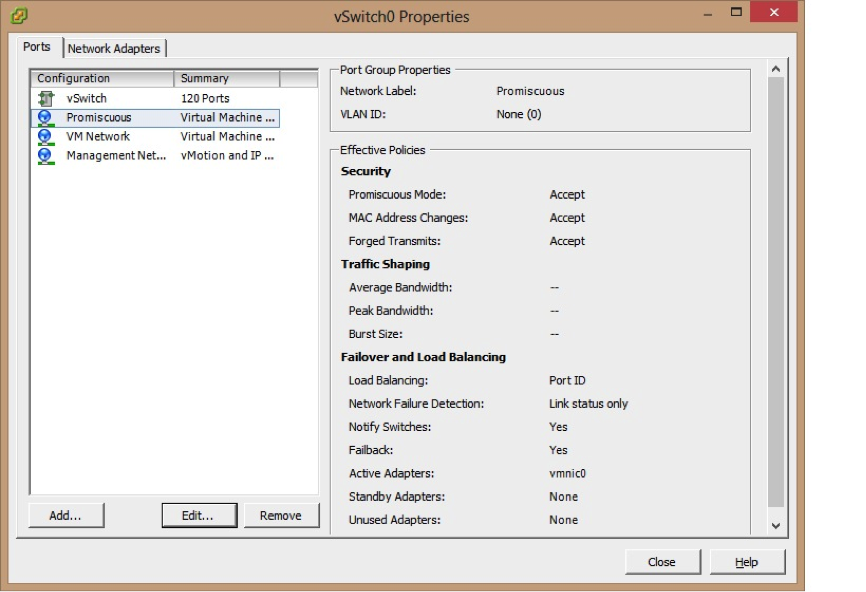

Fortunately, with VMware ESX/ESXi infrastructure we can configure a group of ports to see all network traffic traversing the virtual switch.

"Promiscuous mode is a security policy which can be defined at the virtual switch or portgroup level in vSphere ESX/ESXi. A virtual machine, Service Console or VMkernel network interface in a portgroup which allows use of promiscuous mode can see all network traffic traversing the virtual switch".

By default, the promiscuous mode policy is set to Reject.

To enable promiscuous mode:

- Log into the ESXi/ESX host or vCenter Server using the vSphere Client.

- Select the ESXi/ESX host in the inventory.

- Click the Configuration tab.

- In the Hardware section, click Networking.

- Click Properties of the virtual switch for which you want to enable promiscuous mode.

- Select the virtual switch or portgroup you wish to modify and click Edit.

- Click the Security tab.

- From the Promiscuous Mode dropdown menu, click Accept.
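The same change can probably also be made from the ESXi shell with esxcli instead of the vSphere Client. The following is a minimal sketch, not part of the original diary: it assumes a standard vSwitch and a port group named "Promiscuous" (the `PORTGROUP` variable and the `run` helper are hypothetical names introduced here). By default it only prints the commands; set `APPLY=1` on the ESXi host to execute them.

```shell
#!/bin/sh
# Sketch: allow promiscuous mode on a single standard-vSwitch port group.
# PORTGROUP is an assumed name; substitute the port group you created.
PORTGROUP="${PORTGROUP:-Promiscuous}"

# Print each command by default; execute it only when APPLY=1 (on an ESXi host).
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    else
        printf '%s\n' "$*"
    fi
}

# Set the policy on the port group only, so the vSwitch default can stay at Reject.
run esxcli network vswitch standard portgroup policy security set \
    --portgroup-name="$PORTGROUP" --allow-promiscuous=true

# Read the policy back to confirm the change.
run esxcli network vswitch standard portgroup policy security get \
    --portgroup-name="$PORTGROUP"
```

Setting the policy at the port group level rather than on the whole vSwitch limits which virtual machines are able to see the mirrored traffic.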

Performance issues:

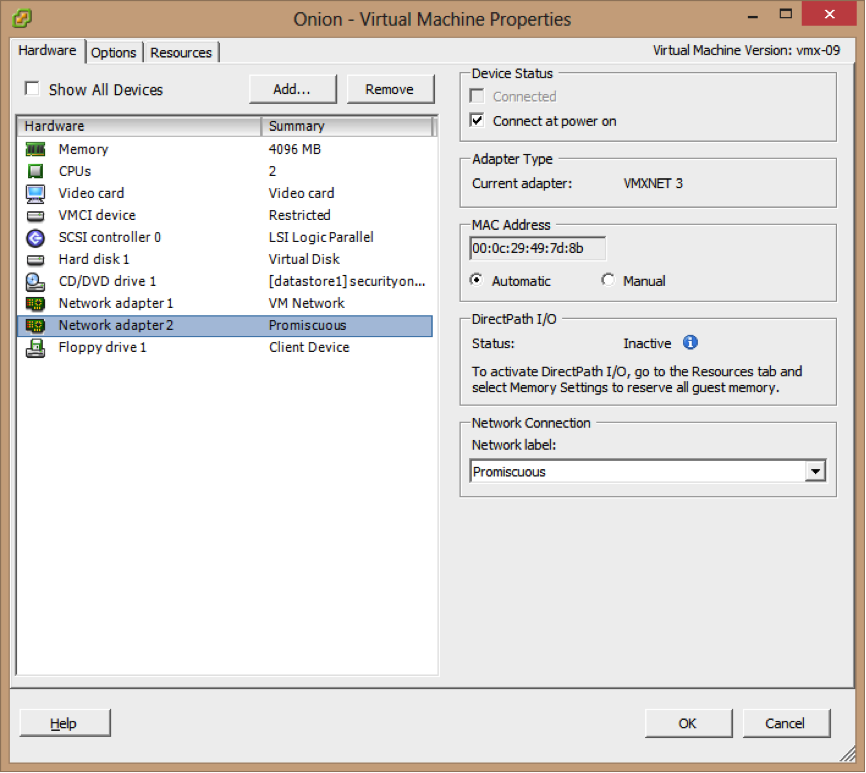

A VMXNET 3 vNIC will provide better performance than other vNIC types (Figure 1).

Figure 1 (VMXNET 3)

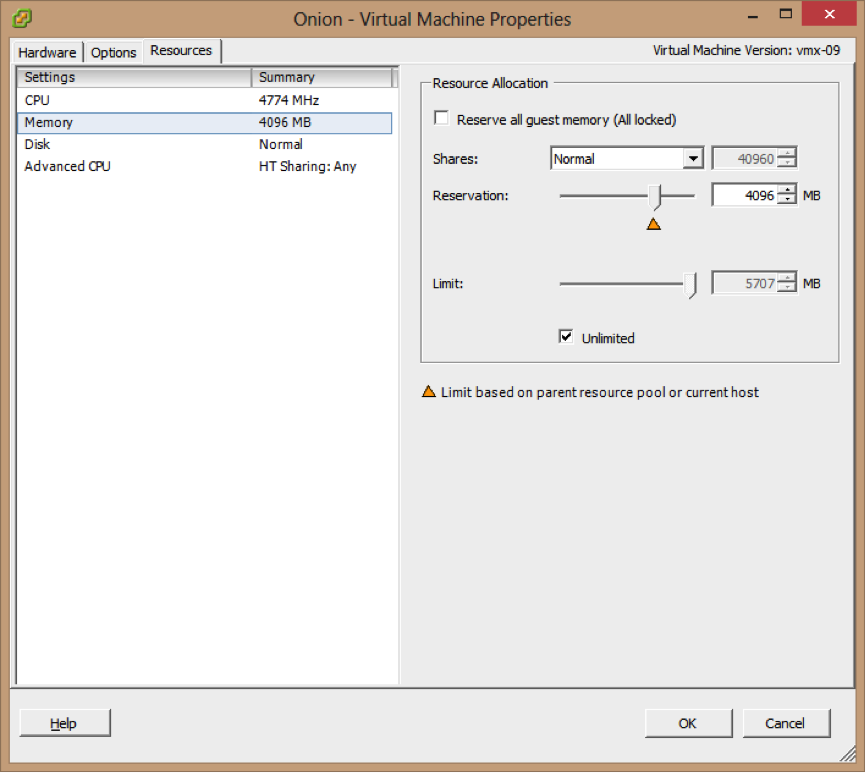

Reserving memory and CPU resources is highly recommended to make sure that the resources will be available when they are needed (Figure 2).

Figure 2 (Reserved Memory)

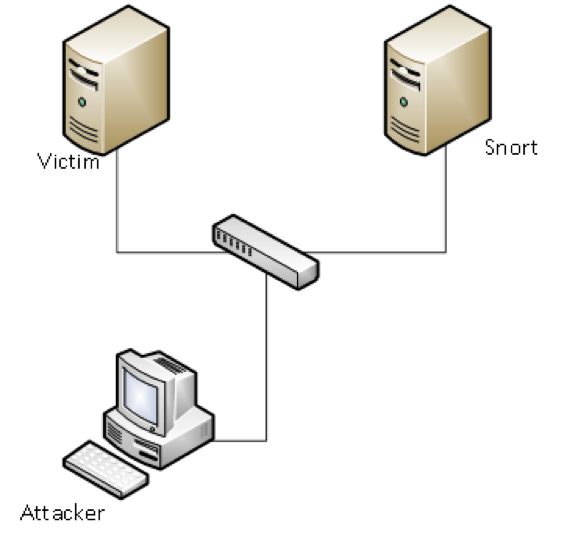

The test lab setup

The test lab consists of VMware ESXi, Kali Linux, Security Onion and Metasploitable. ESXi 5.1 is the host system, the Kali VM is the attack server, Metasploitable is the victim, and Security Onion runs the Snort instance (see Figure 3).

Figure 3 (Test Lab)

Test Lab Network Diagram

The Network Configuration

For this experiment the vSwitch has been configured with two port groups. The first is the Virtual Machines port group, in which promiscuous mode is left at the default value, "Reject". The second is the Promiscuous port group, in which promiscuous mode is set to "Accept" (see Figure 4).

Figure 4 (vSwitch Configuration)

Security Onion has been configured with two network interfaces: eth0 for management, with IP 192.168.207.12, and eth1 without an IP address. eth0 is connected to the "VM Network" port group and eth1 is connected to the "Promiscuous" port group.
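Before relying on Snort alerts, it can help to confirm that eth1 really sees traffic between the other VMs. The sketch below is an illustration rather than the author's procedure: it assumes a Linux sensor with iproute2 and tcpdump installed, and the `SNIFF_IF`, `VICTIM_IP` and `run` names are hypothetical. 192.168.207.20 is the Metasploitable address used in the tests. Commands are only printed unless `APPLY=1` is set and the script is run as root on the sensor.

```shell
#!/bin/sh
# Sketch: bring up the sniffing interface without an IP and check that it sees lab traffic.
SNIFF_IF="${SNIFF_IF:-eth1}"                 # interface attached to the Promiscuous port group
VICTIM_IP="${VICTIM_IP:-192.168.207.20}"     # Metasploitable address used in the tests

# Print each command by default; execute it only when APPLY=1 (as root on the sensor).
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    else
        printf '%s\n' "$*"
    fi
}

# Make sure no IP address is bound to the sniffing interface.
run ip addr flush dev "$SNIFF_IF"

# Bring the link up and put the NIC into promiscuous mode.
run ip link set dev "$SNIFF_IF" up promisc on

# Capture ten packets to or from the victim. If the port group policy is Accept,
# traffic between the attacker and the victim should appear here.
run tcpdump -n -i "$SNIFF_IF" -c 10 host "$VICTIM_IP"
```

If tcpdump shows only broadcast traffic, the port group policy is the first thing to recheck, since the guest NIC being in promiscuous mode is not enough when the virtual switch rejects it.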

Testing Snort:

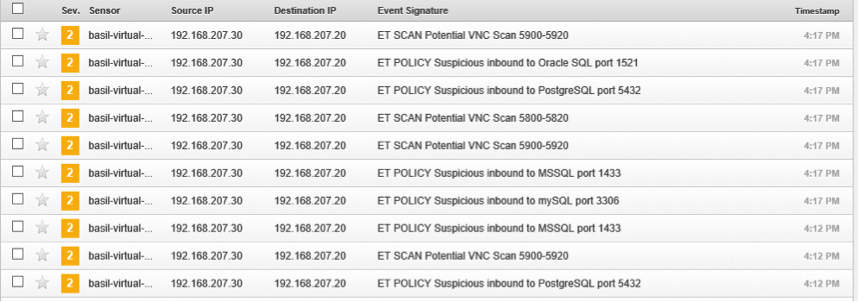

The first test scanned the Metasploitable VM with Nmap. Snort detected the attempt successfully (Figure 5). The command was:

nmap 192.168.207.20

Figure 5 (Snort alerts for Nmap scans)

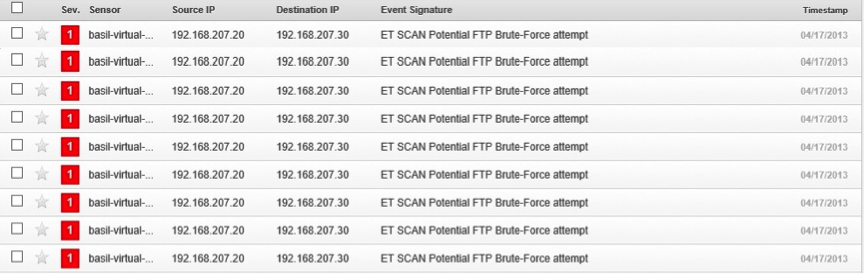

The second test tried to brute-force the Metasploitable root password over FTP using Hydra (Figure 6):

hydra -l root -P passwordslist.txt 192.168.207.20 ftp

Figure 6 (Hydra brute force alerts)

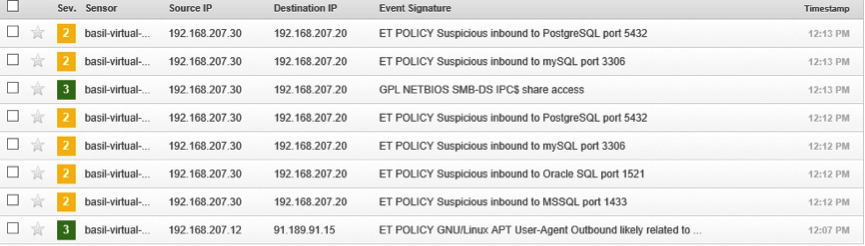

The third test used Metasploit to exploit Metasploitable (see Figure 7).

Figure 7 (Metasploit alerts)


Comments

Running an extra VM on every host can be quite an administrative mess. An in-hypervisor solution is more efficient. To get all the traffic in a distributed environment, this will probably require the distributed virtual switch with port mirroring, the SPAN capability of the Nexus 1000V, or a commercial solution such as Phantom Virtual Tap, in order to monitor multiple port groups and ESXi hosts without an extra Snort VM for every host in the environment.

"Reserving memory and CPU resources is highly recommended to make sure that the resource will be available"

I strongly discourage setting any reservation values on any individual VM; this can be highly cumbersome to manage.

In case the VM gets vMotioned, these "reservation changes" can be lost.

Reservations are used to ensure that SLAs continue to be met during periods of resource contention. The implementation of large reservations therefore has a potentially high cost for the business in case of contention, especially 4 CPU Megacycles that Snort probably does not require.

Also, if there is memory contention, there is no reason Snort should be excluded from ballooning or memory pressure: that reservation could force other workloads to swap, even if Snort does not need that memory.

It is best practice to set reservations through the resource allocation tab of the cluster or parent resource pool, after obtaining the requirements from the business, and the reservations should be the minimum value required to meet those requirements.

These affect cluster failover capacity as well, and could mean that the Tier 1 database VM or Exchange server isn't allowed to power on, due to clashing reservations.

I recommend always being cautious with setting large reservations, limits, number of vCPUs, or shares on an individual VM.

Mysid

May 30th 2013


AndrewB

Jun 3rd 2013


AndrewB

May 21st 2018
