The Dark Side of Certificate Transparency

I am a big fan of the idea behind Certificate Transparency [1]. The real problem with SSL (and TLS; the distinction doesn't matter for this discussion) is not weak ciphers or subtle issues with algorithms (yes, you should still fix those), but the certificate authority trust model. In the past, it has been too easy to obtain a fraudulent certificate [2]. There was little accountability when certificate authorities issued test certificates, or simply messed up and validated the wrong user for a domain because of web application bugs or social engineering [3][4].

With Certificate Transparency, participating certificate authorities publish every certificate they issue in a log. The log is public, so you can check whether someone received a certificate for a domain name you manage.

You can search the certificate transparency logs published by various CAs directly, or you can use one of the search engines that already collect the logs and make them searchable [1].

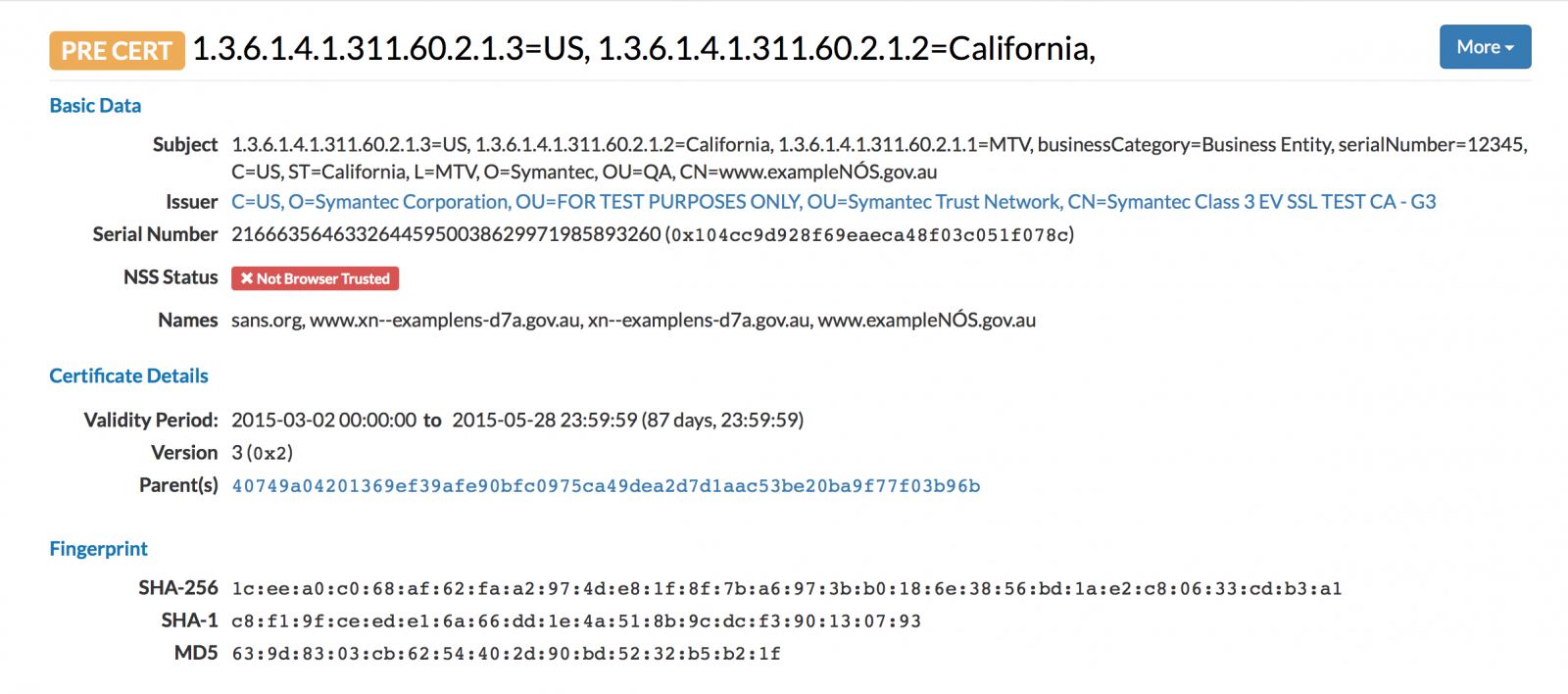

For example, here is an "interesting" certificate for "sans.org", issued by the infamous Symantec test CA [5].

So what is the "dark side" part?

Many organizations obtain certificates for internal host names that they do not necessarily want to be known. In the past, Internet-wide scans did catalog TLS certificates, but they only indexed certificates on publicly reachable sites. With Certificate Transparency, names that are intended for internal use only may leak. Here are a few interesting CNs I found:

- vpn.miltonsandfordwines.com

- upstest2.managehr.com

- mail.backup-technology.co.uk

To find these, I just searched one of the Certificate Transparency log search engines, crt.sh, for "internal".
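A search like this can also be scripted. The following is a minimal sketch, assuming crt.sh still returns JSON when `output=json` is added to the query and that each record carries a `name_value` field with newline-separated names. Neither is a documented, stable interface, so treat both as assumptions. The helper names `build_query_url`, `extract_names`, and `search` are made up for this example.

```python
import json
import urllib.parse
import urllib.request

# Assumption: crt.sh serves JSON for "?q=...&output=json". This is not a
# documented, stable API and may change or be rate limited.
CRT_SH = "https://crt.sh/"


def build_query_url(pattern: str) -> str:
    """Build a crt.sh search URL. '%' is used as a wildcard, e.g. '%.example.com'."""
    return CRT_SH + "?" + urllib.parse.urlencode({"q": pattern, "output": "json"})


def extract_names(records: list) -> set:
    """Collect the unique, lowercased host names from a list of crt.sh records."""
    names = set()
    for record in records:
        # Assumption: 'name_value' may hold several newline-separated names.
        for name in record.get("name_value", "").splitlines():
            name = name.strip().lower()
            if name:
                names.add(name)
    return names


def search(pattern: str, timeout: float = 30.0) -> set:
    """Query crt.sh over the network and return the names found in the results."""
    with urllib.request.urlopen(build_query_url(pattern), timeout=timeout) as resp:
        return extract_names(json.load(resp))
```

Calling `search("%.example.com")` would then list every logged name under a domain you manage (replace `example.com` with your own domain).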

There are currently two options considered to "fix" this problem:

- Allow customers to opt out of having their certificate logged. This is a bad idea. A malicious customer would certainly opt out.

- Redact sub-domain information from the log. This is currently in the works, but the current standard doesn't support this yet. In the long run, this appears to be a better option.

But you should certainly regularly search certificate transparency logs (or any scans for SSL certificates) for your domain name to detect abuse or leakage of internal information.
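As a rough illustration of what such a regular check could look like, here is a small sketch that takes the names seen in the logs for your domain and sorts them into two lists: names you did not expect (possible abuse) and names that look internal (possible leakage). The function `review_names`, the `INTERNAL_HINTS` keyword list, and the idea of keeping an inventory of expected names are assumptions made for this example, not part of any standard tool.

```python
# Illustrative keywords only; tune these to your own naming conventions.
INTERNAL_HINTS = ("internal", "intranet", "vpn", "test", "backup")


def review_names(observed, domain, expected, hints=INTERNAL_HINTS):
    """Return (unexpected, internal_looking) names found under `domain`.

    observed: names collected from certificate transparency logs
    domain:   the domain you manage, e.g. "example.com"
    expected: names you know you requested certificates for
    """
    domain = domain.lower().strip(".")
    expected = {name.lower() for name in expected}
    unexpected, internal_looking = [], []
    for name in sorted({n.lower() for n in observed}):
        # Skip names that do not belong to the domain being reviewed.
        if name != domain and not name.endswith("." + domain):
            continue
        if name not in expected:
            unexpected.append(name)
        # Only look for hints in the part of the name left of the domain.
        prefix = name[: -len(domain)].rstrip(".")
        if any(hint in prefix for hint in hints):
            internal_looking.append(name)
    return unexpected, internal_looking
```

A simple substring match like this will produce false positives, so the output is a starting point for manual review rather than an alert by itself.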

[1] https://www.certificate-transparency.org

[2] https://security.googleblog.com/2015/03/maintaining-digital-certificate-security.html

[3] http://oalmanna.blogspot.ro/2016/03/startssl-domain-validation.html

[4] https://thehackerblog.com/keeping-positive-obtaining-arbitrary-wildcard-ssl-certificates-from-comodo-via-dangling-markup-injection/index.html

[5] https://censys.io/certificates/1ceea0c068af62faa2974de81f8f7ba6973bb0186e3856bd1ae2c80633cdb3a1


Comments

thank you for alerting me to this issue. my thoughts are that we are seeing an exponential breakdown of trust in centralised certificate generation. thus we have a clear demonstration - another one - that centralised control is simply not going to work.

have you seen this https://github.com/okTurtles/dnschain which i believe would be a much more robust solution, and has, at its core, the transparency that you're advocating.

Anonymous

Aug 6th 2016


https://scans.io/study/sonar.fdns

For enumerating all hostnames associated with a domain, this data has items missing from dnsdumpster.com.

Anonymous

Sep 2nd 2016


For those looking to do in-depth information gathering campaigns against a target, I recommend having local copies of whatever public data sets you can get your hands on. Start with scans.io, censys.io and certificate transparency logs.

Anonymous

Sep 21st 2017
