Example of Keeping Analysts & Researchers Away

It is well known that bad guys implement pieces of code to defeat security analysts and researchers. Modern malware often includes VM evasion techniques to detect as early as possible whether it is running in a sandbox environment. The same applies to web services such as phishing pages or C&C panels.

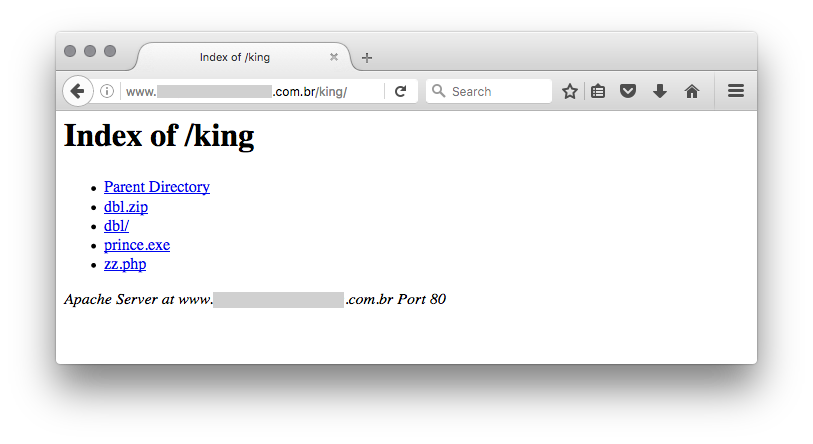

Yesterday, I found a website delivering a malicious PE file. The URL was http://www.[redacted].com/king/prince.exe. This PE file was downloaded and executed by a malicious Office document. Nothing special here; it's a classic attack scenario. When I receive a URL like this one, I always try to access the parent directory indexes as well as some common filenames and directories (I built and maintain my own dictionary for this purpose). Playing active defense may help you learn more about the attacker. And this time, I was lucky:

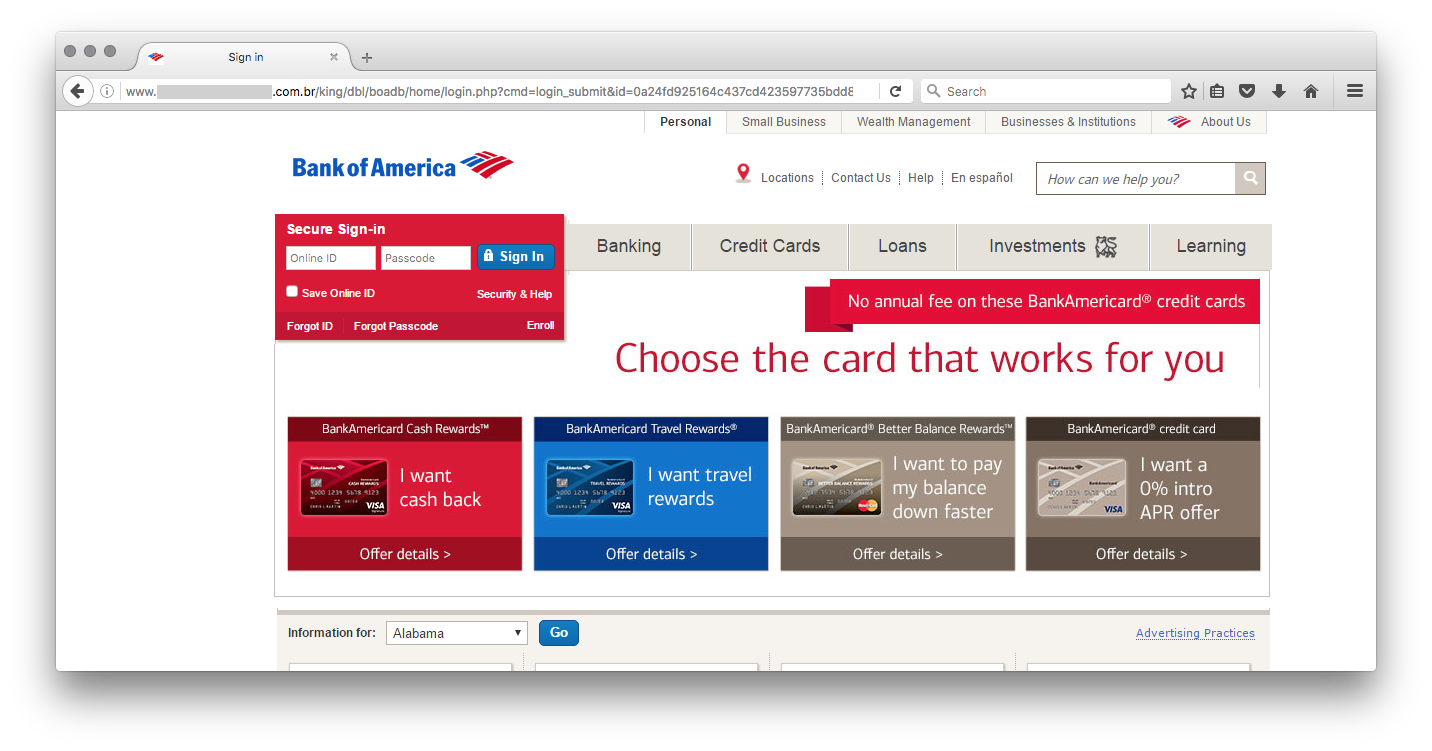
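The probing approach described above can be sketched as a small helper that walks up from a file URL to each parent directory and appends wordlist entries. This is only an illustration: the function name `candidateUrls`, the placeholder host `www.example.com` and the two-entry wordlist are assumptions, not the author's actual dictionary or tooling. The helper assumes the input URL points to a file, not a directory.

```php
<?php
// Build candidate URLs by walking up from a file URL to each parent directory
// and appending entries from a wordlist. Hypothetical helper for illustration.
function candidateUrls(string $url, array $wordlist): array
{
    $parts = parse_url($url);
    if ($parts === false || !isset($parts['scheme'], $parts['host'])) {
        return [];
    }
    $base = $parts['scheme'] . '://' . $parts['host'];

    // Split the path into segments and drop the final one (the file name).
    $segments = array_values(array_filter(explode('/', $parts['path'] ?? ''), 'strlen'));
    array_pop($segments);

    $candidates = [];
    // From the deepest directory up to the web root.
    for ($depth = count($segments); $depth >= 0; $depth--) {
        $dir = $base . '/' . implode('/', array_slice($segments, 0, $depth));
        if ($depth > 0) {
            $dir .= '/';
        }
        $candidates[] = $dir;              // the directory index itself
        foreach ($wordlist as $name) {
            $candidates[] = $dir . $name;  // common file or directory names
        }
    }
    return array_values(array_unique($candidates));
}

// Placeholder host and wordlist, not taken from the original incident.
print_r(candidateUrls('http://www.example.com/king/prince.exe', ['index.php', 'admin/']));
```

The helper only generates URLs; actually requesting them is left to whatever client you normally use from an isolated analysis host.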

The file 'zz.php' is less interesting: it is a simple PHP mailer. The 'dbl' directory is more interesting, as its pages serve a fake Bank of America website.

In this case, the attackers made another mistake: the source code of the phishing site was left on the server in the 'dbl.zip' file. Once downloaded and analyzed, it revealed a classic attack trying to lure visitors and collect credentials. Note that the attacker was identified via the gmail.com address present in the scripts. But the most interesting file is called 'blocker.php' and is included at the beginning of the index.php file:

    ...
    include('blocker.php');
    ...

Let's have a look at this file. It performs several checks based on the visitor's details (IP and browser).

First of all, it performs a reverse lookup of the visitor's IP address and searches the resulting hostname for specific strings:

    $hostname = gethostbyaddr($_SERVER['REMOTE_ADDR']);
    $blocked_words = array("above","google","softlayer","amazonaws","cyveillance","phishtank","dreamhost","netpilot","calyxinstitute","tor-exit", "paypal");
    foreach($blocked_words as $word) {
        if (substr_count($hostname, $word) > 0) {
            header("HTTP/1.0 404 Not Found");
        }
    }
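To see how this keyword filter behaves, here is a minimal sketch that applies the same case-sensitive `substr_count()` test to a few hostnames. The helper name `matchedKeywords` and the sample PTR names are hypothetical; only the keyword list comes from the excerpt. Note also that, as excerpted, this branch sends a 404 status without calling `exit()`, so unless code not shown here stops the script, the rest of the page may still be delivered.

```php
<?php
// Keyword list copied from the blocker.php excerpt.
$blocked_words = array("above","google","softlayer","amazonaws","cyveillance","phishtank","dreamhost","netpilot","calyxinstitute","tor-exit","paypal");

// Return every keyword found in a hostname, using the same case-sensitive
// substring test as the phishing kit. Hypothetical helper for illustration.
function matchedKeywords(string $hostname, array $words): array
{
    $hits = [];
    foreach ($words as $word) {
        if (substr_count($hostname, $word) > 0) {
            $hits[] = $word;
        }
    }
    return $hits;
}

// Made-up PTR names, used only to show the matching behaviour.
$samples = [
    'ec2-203-0-113-10.compute-1.amazonaws.com',
    'crawl-203-0-113-20.googlebot.com',
    'host-198-51-100-7.dsl.isp.example',
];
foreach ($samples as $host) {
    echo $host, ' => ', implode(',', matchedKeywords($host, $blocked_words)) ?: '(no match)', "\n";
}
```

To check your own egress address, you could pass the result of `gethostbyaddr()` for that IP to the helper. Keep in mind that `gethostbyaddr()` returns the IP address unchanged when no reverse record exists, in which case none of the keywords can match.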

Next, the visitor's IP address is checked against a very long list of prefixes (redacted):

    $bannedIP = array( [redacted] );
    if(in_array($_SERVER['REMOTE_ADDR'],$bannedIP)) {
        header('HTTP/1.0 404 Not Found');
        exit();
    } else {
        foreach($bannedIP as $ip) {
            if(preg_match('/' . $ip . '/',$_SERVER['REMOTE_ADDR'])){
                header('HTTP/1.0 404 Not Found');
            }
        }
    }
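One side effect of this construction is worth illustrating: each prefix is placed into a regular expression unescaped and unanchored, so a dot matches any character and the pattern can match anywhere in the address. The sketch below compares that behaviour with a literal, start-anchored comparison. The prefixes `66.102.` and `1.2.` and both function names are made up for the example, since the real list was redacted.

```php
<?php
// Same construction as the excerpt: the prefix is dropped into a regex
// without preg_quote() and without a ^ anchor.
function looseMatch(string $prefix, string $ip): bool
{
    return preg_match('/' . $prefix . '/', $ip) === 1;
}

// Stricter comparison for contrast: literal match anchored at the start.
function strictPrefixMatch(string $prefix, string $ip): bool
{
    return strncmp($ip, $prefix, strlen($prefix)) === 0;
}

// Hypothetical prefix and addresses, chosen only to show the difference.
$prefix = '66.102.';
foreach (['66.102.9.1', '166.102.9.1', '10.66.102.7', '66.10.2.1'] as $ip) {
    printf(
        "%-12s loose=%s strict=%s\n",
        $ip,
        var_export(looseMatch($prefix, $ip), true),
        var_export(strictPrefixMatch($prefix, $ip), true)
    );
}
```

In practice this means the kit probably blocks more addresses than its author intended, which is one more reason an analyst may see a 404 from an address that is not obviously on the list.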

Here is a list of the most relevant banned networks:

- Digital Ocean

- Cogent

- Internet Systems Consortium

- Amazon

- Datapipe

- DoD Network Information Center

- Omnico Hosting

- Comverse Network Systems

- USAA

- RCP HHES

- Postini

- FDC Servers

- SoftLayer Technologies

- AppNexus

- CYBERCON

- Quality Technology Services

- Netvision

- Netcraft Scandinavia

- GlobalIP-Net

- China Unicom

- Exalead

- Comverse

- Chungnam National University

And the last check is based on the User-Agent:

    if(strpos($_SERVER['HTTP_USER_AGENT'], 'google')
        or strpos($_SERVER['HTTP_USER_AGENT'], 'msnbot')
        or strpos($_SERVER['HTTP_USER_AGENT'], 'Yahoo! Slurp')
        or strpos($_SERVER['HTTP_USER_AGENT'], 'YahooSeeker')
        or strpos($_SERVER['HTTP_USER_AGENT'], 'Googlebot')
        or strpos($_SERVER['HTTP_USER_AGENT'], 'bingbot')
        or strpos($_SERVER['HTTP_USER_AGENT'], 'crawler')
        or strpos($_SERVER['HTTP_USER_AGENT'], 'PycURL')
        or strpos($_SERVER['HTTP_USER_AGENT'], 'facebookexternalhit') !== false) {
        header('HTTP/1.0 404 Not Found');
        exit;
    }
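A detail of this condition is easy to miss. In PHP, `!==` binds more tightly than `or`, so only the final `strpos()` result is compared with `false`; the others are used directly as booleans. Since `strpos()` returns 0 when the needle sits at the very start of the string, and 0 is falsy, a User-Agent that begins with one of the earlier needles is not caught. The sketch below reproduces that structure with a shortened needle list; both function names and the sample User-Agent strings are illustrative assumptions.

```php
<?php
// Same operator structure as the excerpt, with fewer needles:
// only the LAST strpos() is compared with false.
function kitStyleBlocked(string $ua): bool
{
    return (strpos($ua, 'msnbot') or strpos($ua, 'PycURL') or strpos($ua, 'facebookexternalhit') !== false);
}

// Stricter variant for comparison: every result is compared with false,
// and stripos() makes the match case-insensitive.
function strictBlocked(string $ua): bool
{
    foreach (['msnbot', 'PycURL', 'facebookexternalhit'] as $needle) {
        if (stripos($ua, $needle) !== false) {
            return true;
        }
    }
    return false;
}

// Sample User-Agent strings chosen to show where the two variants differ.
$samples = [
    'PycURL/7.19.5',                          // needle at offset 0
    'Mozilla/5.0 (compatible; msnbot/2.0)',   // needle in the middle
    'facebookexternalhit/1.1',                // last needle, at offset 0
];
foreach ($samples as $ua) {
    printf("%-40s kit=%s strict=%s\n", $ua, var_export(kitStyleBlocked($ua), true), var_export(strictBlocked($ua), true));
}
```

The matching is also case-sensitive, which may explain why the kit lists both 'google' and 'Googlebot'.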

Surprisingly, this last check is basic. Often, User-Agents from tools or frameworks are banned as well, such as:

- Wget/1.13.4 (linux-gnu)

- curl/7.15.5 (x86_64-redhat-linux-gnu) libcurl/7.15.5 OpenSSL/0.9.8b zlib/1.2.3 libidn/0.6.5

- python-requests/2.9.1

- Python-urllib/2.7

- Java/1.8.0_111

- ...

Many of the IP address ranges belong to hosting companies. Many researchers use VPSs and servers hosted there, which is why those ranges are banned. Conversely, the interesting targets for the phishing page are residential customers of the bank, who connect through large consumer ISPs.

Conclusion: if you are hunting for malicious code or sites, use an anonymous IP address (a residential DSL or cable line works best) and make sure to use a User-Agent that mimics a "classic" target.
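As a rough illustration of the User-Agent advice, the following sketch builds PHP stream-context options for a request that presents a browser-like User-Agent instead of a tool default. The function name `browserLikeHttpOptions`, the Firefox-style string and the header values are example assumptions; pick values that match the population the page actually targets. The request itself is left commented out and uses a placeholder host.

```php
<?php
// Build HTTP stream-context options that present a browser-like User-Agent.
// Hypothetical helper; all values are examples.
function browserLikeHttpOptions(string $userAgent): array
{
    return [
        'http' => [
            'method'          => 'GET',
            'user_agent'      => $userAgent,
            'header'          => "Accept: text/html\r\nAccept-Language: en-US,en;q=0.9\r\n",
            'timeout'         => 10,
            'ignore_errors'   => true,  // still return the body on a 404 response
            'follow_location' => 0,     // do not follow redirects automatically
        ],
    ];
}

// Example User-Agent string only.
$ua = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:50.0) Gecko/20100101 Firefox/50.0';
$context = stream_context_create(browserLikeHttpOptions($ua));

// Run the actual request only from an isolated analysis host:
// $body = file_get_contents('http://www.example.com/', false, $context);
```

Setting `ignore_errors` matters here because a kit like this one may answer with a 404 status while still sending content, and the body is what you want to inspect.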

Xavier Mertens (@xme)

ISC Handler - Freelance Security Consultant


Comments

Anonymous

Nov 17th 2016


myipaddress.com etc. and give them a bogus address. Although I honestly don't understand why these malware samples use this method for address retrieval.
