Profiling SSL Clients with tshark

Cisco recently published a paper showing how malicious SSL traffic sometimes uses very specific SSL options. Once you know what set of SSL options to look for, you will then be able to identify individual pieces of malware without having to decrypt the SSL traffic.

(and before anybody complains: SSL does include TLS. I am just old fashioned that way)

I wanted to see how well this applies to HTTPS traffic hitting the ISC website. I collected about 100 MB of traffic, which covered client hello requests from about 719 different IP addresses.

There are a couple of parameters I looked at:

- Use of the SNI field. All reasonably modern browsers use this field.

- Number of ciphers offered. I could look at individual ciphers, but the size of the cipher suites field was easier to investigate.

- The total size of all extensions. Again, this is simpler than looking at individual extensions. I subtracted the size of the SNI option to eliminate variability due to the different host names we use.

First the SNI option. I used the following tshark command to extract the size of the SNI option:

tshark -r pcap -n -Y 'ssl.handshake.type==1' -T fields -e ssl.handshake.extensions_server_name_list_len | sort -n | uniq -c

Here is the result broken down by hostnames:

| Frequency | Hostname |
|---|---|
| 400 | No SNI Option |
| 5 | dshield.org |
| 1281 | isc.sans.edu / isc.sans.org |
| 1 | incidents.org |
| 58 | www.dshield.org |
| 5 | feeds.dshield.org |
| 29 | secure.dshield.org |
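A breakdown by hostname like the one in the table needs the server name itself, not just its length. The following is a minimal sketch of how that could be done, not necessarily the command used for the table. It assumes the tshark field `ssl.handshake.extensions_server_name` (newer Wireshark releases use a `tls.` prefix instead of `ssl.`); the helper name `sni_by_hostname` is made up for illustration.

```shell
# Hypothetical helper: count client hellos per SNI hostname.
# Input (stdin): one line per client hello holding the server name;
# an empty line means the client sent no SNI option.
sni_by_hostname() {
    awk '{
             name = ($0 == "" ? "No SNI Option" : $0)
             count[name]++
         }
         END {
             for (n in count) printf "%d\t%s\n", count[n], n
         }' | sort -t "$(printf '\t')" -k1,1nr -k2,2
}

# Usage (assumes the capture file is called "pcap" as in the commands above):
# tshark -r pcap -n -Y 'ssl.handshake.type==1' -T fields \
#     -e ssl.handshake.extensions_server_name | sni_by_hostname
```

The output is tab-separated (frequency, then hostname), most frequent first.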

I was a bit surprised by the high number of hits without SNI. So I took a closer look at them.

Next step: Find out who isn't using SNI.

tshark -r pcap -n -Y 'ssl.handshake.type==1 && ! ssl.handshake.extensions_server_name_list_len' -T fields -e ip.src
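The command above prints one source address per client hello, so busy clients show up many times. A small sketch for ranking the addresses by how often they appear follows; the function name `rank_by_count` is an illustrative assumption, not part of tshark.

```shell
# Hypothetical helper: rank input lines by frequency, most frequent first.
# Input (stdin): one value per line, e.g. one source IP per client hello.
# Output: "<count><TAB><value>" per distinct value.
rank_by_count() {
    sort | uniq -c | sort -k1,1nr | awk '{ print $1 "\t" $2 }'
}

# Usage (same capture file name "pcap" as above):
# tshark -r pcap -n \
#     -Y 'ssl.handshake.type==1 && ! ssl.handshake.extensions_server_name_list_len' \
#     -T fields -e ip.src | rank_by_count
```

The addresses at the top of the list are the ones worth matching against the web server logs first.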

One issue I ran into is that some of the requests to our site went through proxies. A proxy terminates the SSL connection, so the options observed depend on the proxy rather than on the web browser behind it. The top user agents I saw without SNI:

- Bing: Looks like Bing doesn't support SNI yet. This is kind of odd for a major search engine.

- Some obviously fake user agents. They claim to be a modern browser, but for example only hit one particular URL over and over.

- Nagios, the monitoring software (no surprise)

- Python-urllib. Again, no surprise

- wget

- various RSS feed and Podcast clients

With the exception of "Bing", all of these are "good to identify". For our site, we do not mind scripting against our API, which explains the large number of requests from clients like Python. Some clients never sent any HTTP requests, so I assume they are just SSL fingerprinting attempts. In particular, the ones that used the heartbeat option in the client hello ;-)

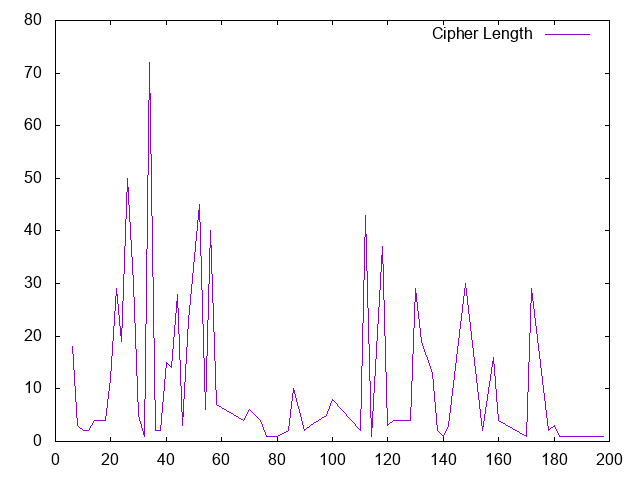

Next, let's look at the number of ciphers offered by the client.

tshark -r pcap -n -Y 'ssl.handshake.type==1' -T fields -e ip.src -e ssl.handshake.cipher_suites_length | sort -u | cut -f2 | sort -n | uniq -c > cipherlength

Again we get a very diverse picture. I expected more pronounced "peaks", but it looks like there is a wide range of possible "right" answers. A length of 34 (which implies 17 different ciphers, at two bytes per cipher suite) appears to be the most common answer, followed by 26 and 52. But let's look at some of the outliers again:

The largest number of ciphers was 99 (cipher length of 198). This request came from 54.213.123.74, which resolves to gw.dlvr.it.
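Since each cipher suite takes two bytes, the length field converts directly into a cipher count. Here is a small sketch that adds that count to the `uniq -c` output saved in the `cipherlength` file; the function name `cipher_counts` is made up for this example.

```shell
# Hypothetical helper: turn "count length" pairs (as written by uniq -c)
# into "count<TAB>length<TAB>number_of_ciphers".
# Each cipher suite is two bytes, so ciphers = length / 2.
cipher_counts() {
    awk '$2 != "" { printf "%d\t%d\t%d\n", $1, $2, $2 / 2 }'
}

# Usage (reads the file written by the command above):
# cipher_counts < cipherlength
```

A length of 34 comes out as 17 ciphers, and 198 as 99.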

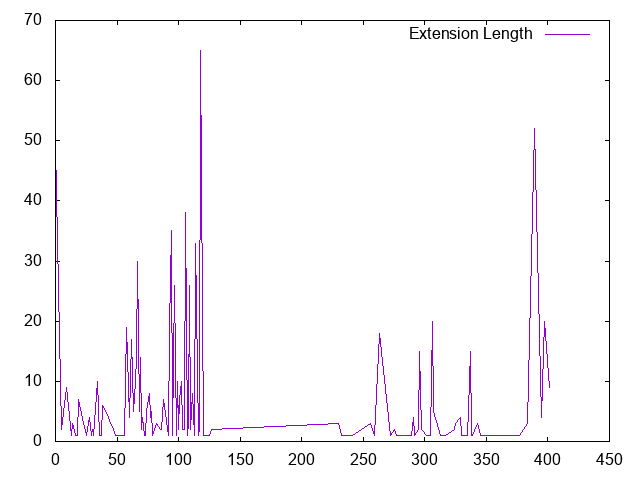

Finally, let's get the total length of the client hello extensions (cipher suites are not included) and subtract the length of the SNI option to remove its variability.

Again, we get a fairly wide distribution. It looks a bit like the cipher length. There were some clients that didn't appear to use any extensions, which are probably the outliers to look at here first.

Among the user agents not using any SSL extensions, the scripts stick out again (nagios, wget, curl and the like).
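A minimal sketch of this calculation, under a few assumptions: it uses the tshark fields `ssl.handshake.extensions_length` and `ssl.handshake.extensions_server_name_list_len`, treats a missing field as 0, and subtracts only the server name list length, so the few fixed header bytes of the SNI extension stay in the total. The function name `ext_len_without_sni` is made up for illustration.

```shell
# Hypothetical helper: histogram of (extensions length - SNI list length).
# Input (stdin): "<extensions_length><TAB><sni_list_length>" per client hello;
# either column may be empty when the client hello lacks that field.
ext_len_without_sni() {
    awk -F '\t' '{
        ext = ($1 == "" ? 0 : $1)   # no extensions at all
        sni = ($2 == "" ? 0 : $2)   # no SNI option
        print ext - sni
    }' | sort -n | uniq -c
}

# Usage (assumes the capture file is called "pcap" as in the commands above):
# tshark -r pcap -n -Y 'ssl.handshake.type==1' -T fields \
#     -e ssl.handshake.extensions_length \
#     -e ssl.handshake.extensions_server_name_list_len | ext_len_without_sni
```

Clients that sent no extensions at all end up in the 0 bucket.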

In conclusion:

I think this does deserve more study. I only had a couple of hours today to work on this. For workstation networks, watching SSL options will certainly make for a nice "backup" to monitoring user agents, in particular if you can't intercept user agents for SSL traffic. For a server, it comes down to how much you can "tighten up" the range of clients you allow to access your web applications. In a DoS situation, this may be a nice way to filter an attack, and you should certainly look for anomalies.
